IBM used some unusual techniques in its integrated circuits, and one of the most visible is packaging them in square metal cans. I've been studying these chips recently, since there's not a lot of information about them. I opened up the large metal chip (1.5" on a side) from the token ring network board below. This chip turned out to be stranger and more interesting than I expected, combining analog circuitry, a custom microprocessor, and complex logic. The internal packaging was also unconventional: instead of the bond wires used by most manufacturers to connect the silicon die, IBM used a "flip-chip" technique, soldering the die upside down onto a ceramic substrate. Instead of pads, the chip had solder balls across its surface, giving it an unexpected layout and appearance. In this blog post, I discuss this chip in detail.

The token ring network, introduced by IBM in 1985,1 was a local-area network technology that competed with Ethernet and other network systems. In a token ring network, the computers are wired in a ring, with each computer receiving packets from the previous computer and transmitting them to the next computer in the loop. To give a computer access to the network, a special three-byte token circulates in the ring. When a computer receives the token, it can transmit a network packet to the next computer in the ring. The packet travels around the ring until it comes back to the original computer. That computer discards the packet and sends out the token in its place, giving another computer a chance to transmit data. In comparison, an Ethernet network lets computers transmit at any time; if two transmit at the same time, the collision is detected and they try again a bit later. A token ring network had the advantage of avoiding collisions, making it more deterministic and fair and providing better performance on a congested network.
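The token-passing scheme described above can be sketched in a few lines of Python. This is a toy model, not the real protocol: it assumes one frame per token visit and ignores priorities, timers, and error handling. The function name `simulate_ring` and the event labels are made up for illustration.

```python
from collections import deque

def simulate_ring(queues):
    """Toy token-passing model.

    queues: one list of pending payloads per station, in ring order.
    Returns a log of (station, event, payload) tuples.
    """
    n = len(queues)
    pending = [deque(q) for q in queues]
    log = []
    holder = 0  # station that currently receives the token
    while any(pending):
        if pending[holder]:
            payload = pending[holder].popleft()
            log.append((holder, "transmit", payload))
            # The frame is repeated by every other station around the ring...
            station = (holder + 1) % n
            while station != holder:
                log.append((station, "repeat", payload))
                station = (station + 1) % n
            # ...until it returns to the sender, which removes it.
            log.append((holder, "strip", payload))
        # The token is released to the next station in the ring.
        holder = (holder + 1) % n
    return log

log = simulate_ring([["A1", "A2"], [], ["C1"]])
transmits = [(s, p) for s, event, p in log if event == "transmit"]
print(transmits)
```

Station 0 cannot send its second frame until the token has gone all the way around, which is the fairness property: station 2 gets its turn in between.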

IBM's use of square metal cans goes back to the early 1960s with IBM's SLT modules (Solid Logic Technology). Because IBM didn't think integrated circuits were mature enough at the time, they used small hybrid modules with a few transistors, diodes, and resistors mounted on a ceramic substrate. These half-inch-square SLT modules were packaged in an aluminum can for protection, giving IBM circuit boards a unique appearance. In the late 1960s, IBM moved to integrated circuits2 but they kept the ½" metal cans instead of the rectangular ceramic or epoxy packages used by other manufacturers. As integrated circuits required more pins, IBM increased the package size, leading to the bulky 1.5" package that I examined.

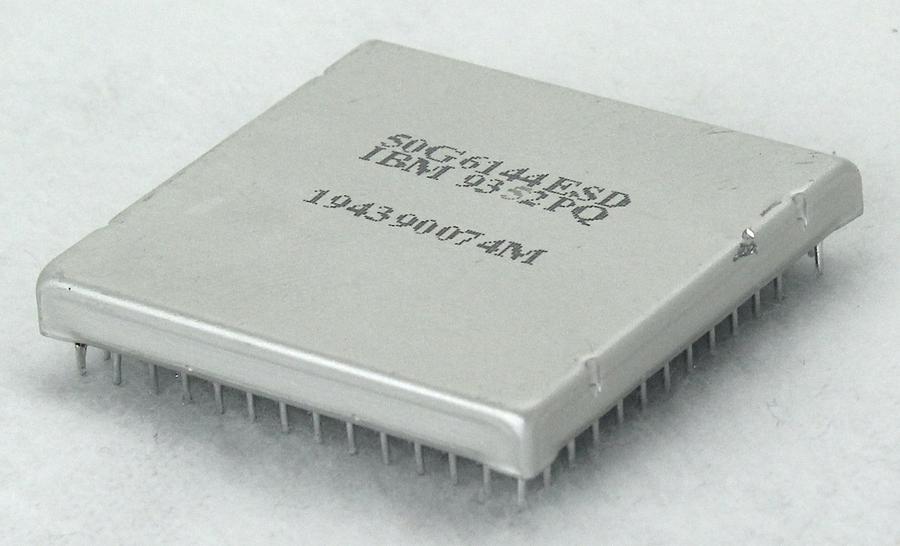

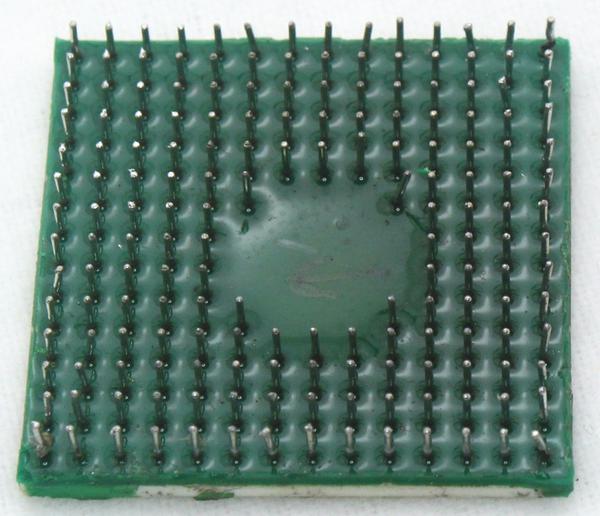

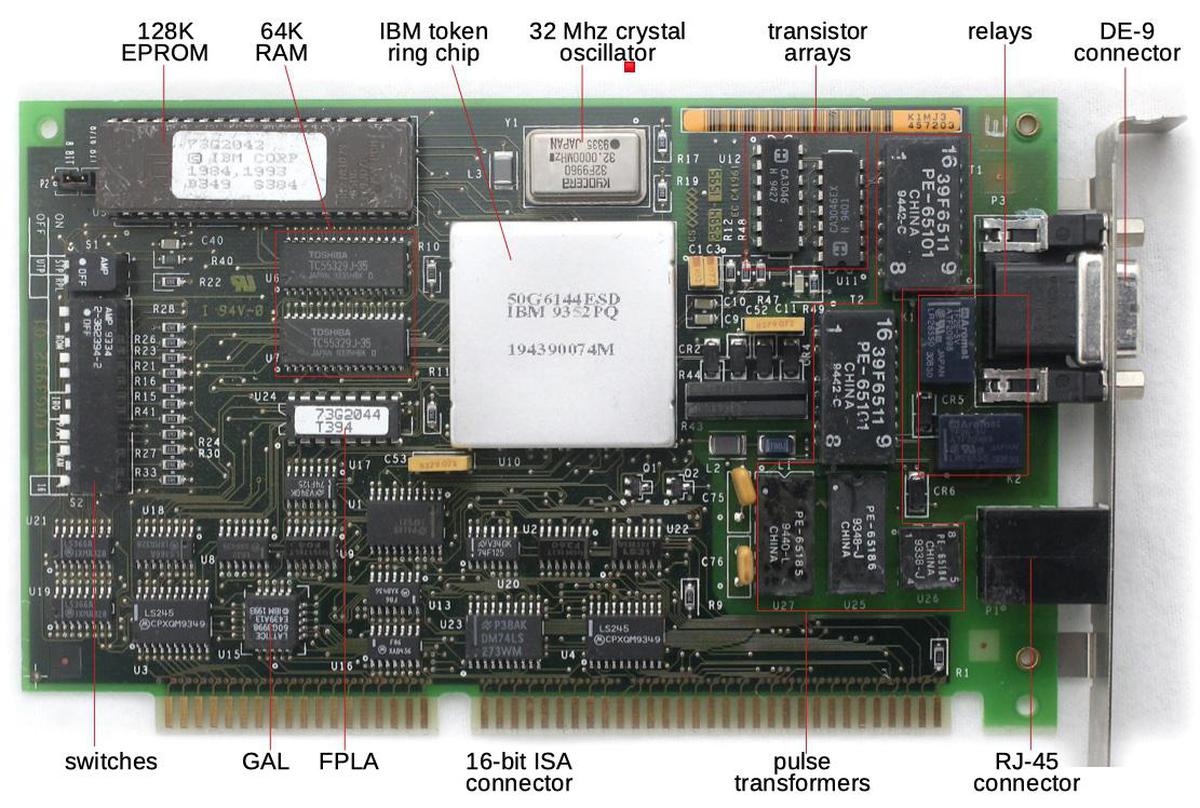

To examine the integrated circuit, I removed it from the board with a hot air gun. In the photo below, you can see the grid of pins underneath the chip. The chip is labeled with the part number 50G6144. The "ESD" suffix indicates an electrostatic-sensitive device that can be damaged by static electricity and requires special handling. The next line, IBM 9352PQ, is a code for the manufacturing site. The final line, 194390074M, shows that the chip was manufactured in 1994 during the 39th week of the year.

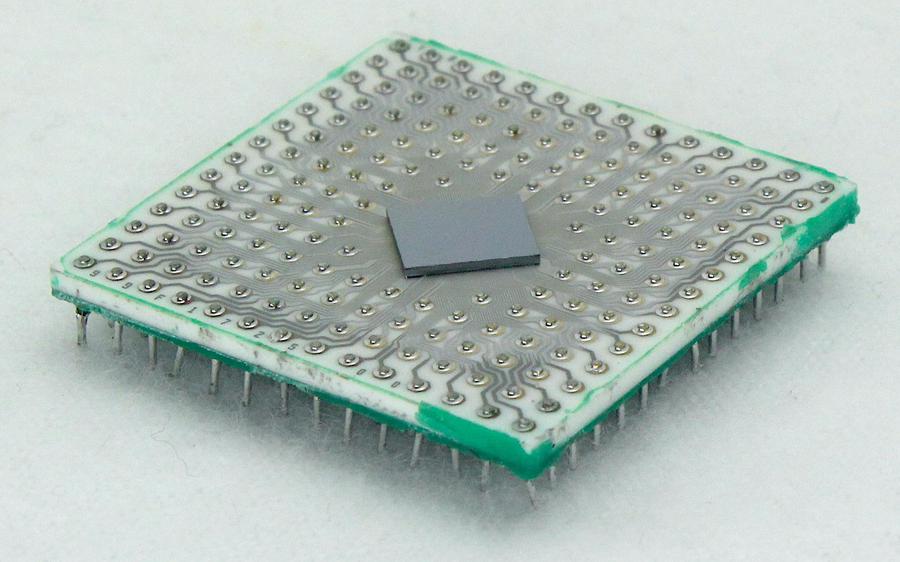

Cutting off the aluminum lid reveals the silicon die inside. The chip is mounted upside down as a flip chip, soldered directly to the connections on the ceramic substrate. Thus, you can't see the chip's circuitry, just the underside of the silicon die. IBM called this mounting technology controlled collapse chip connection or C4.3 (In comparison, most manufacturers mounted a silicon die right side up and connected it to the pins with tiny bond wires.) Tiny printed-circuit traces connect the module's 175 pins to the die.

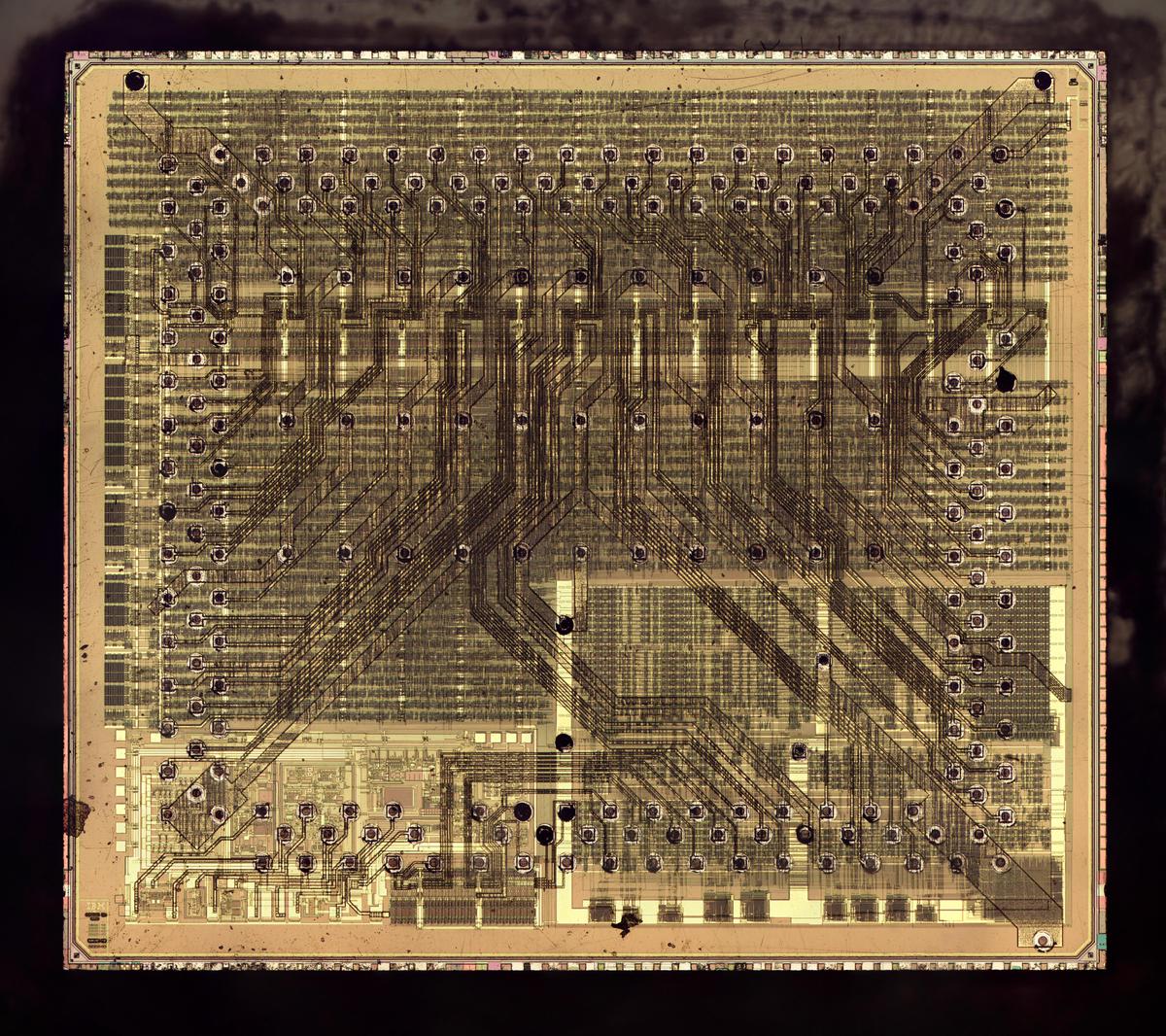

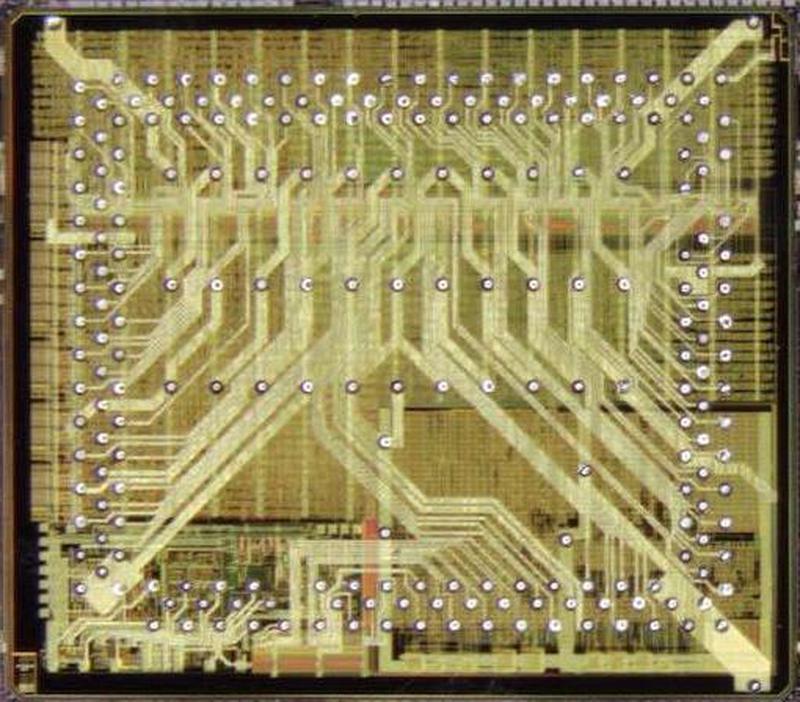

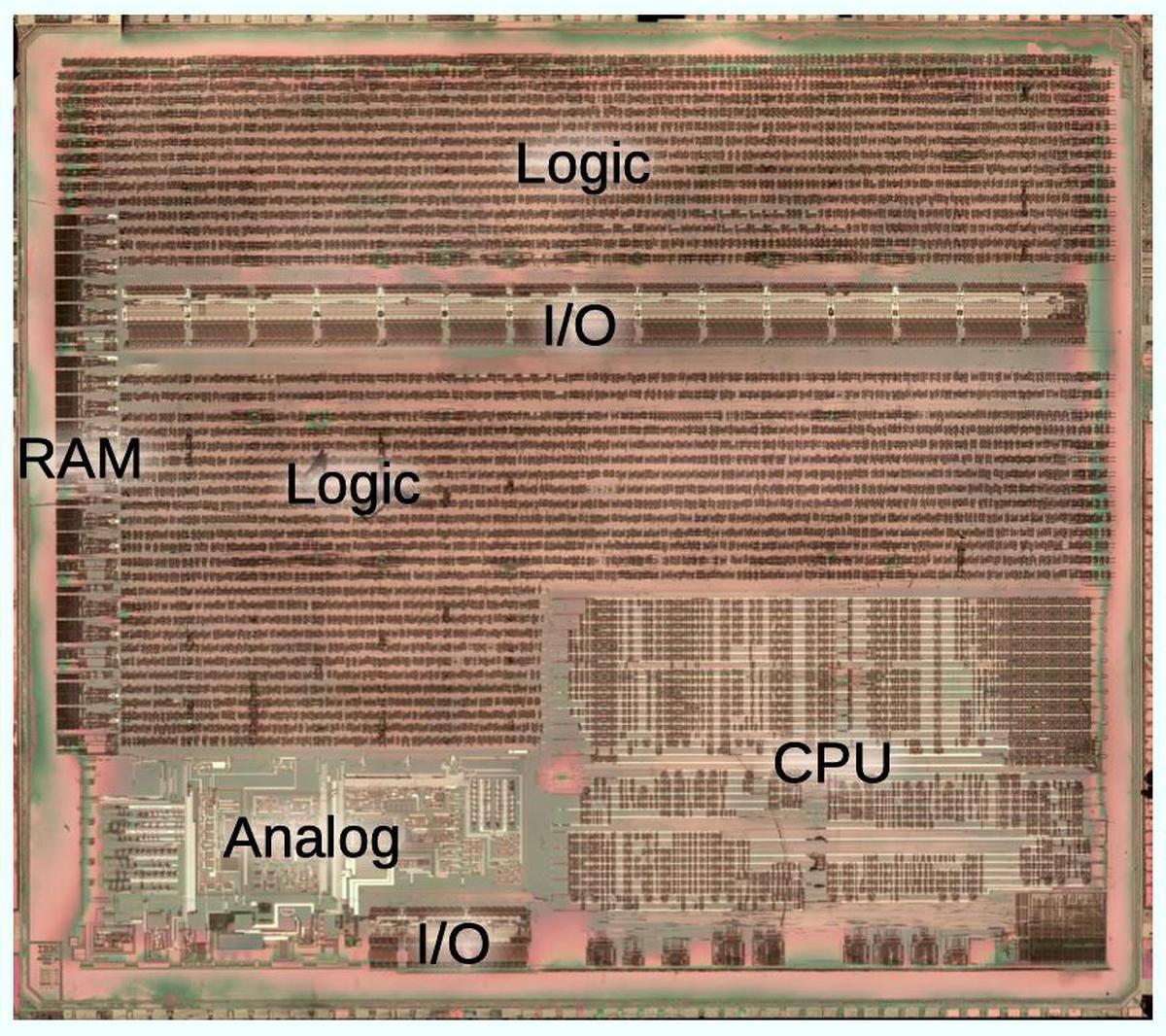

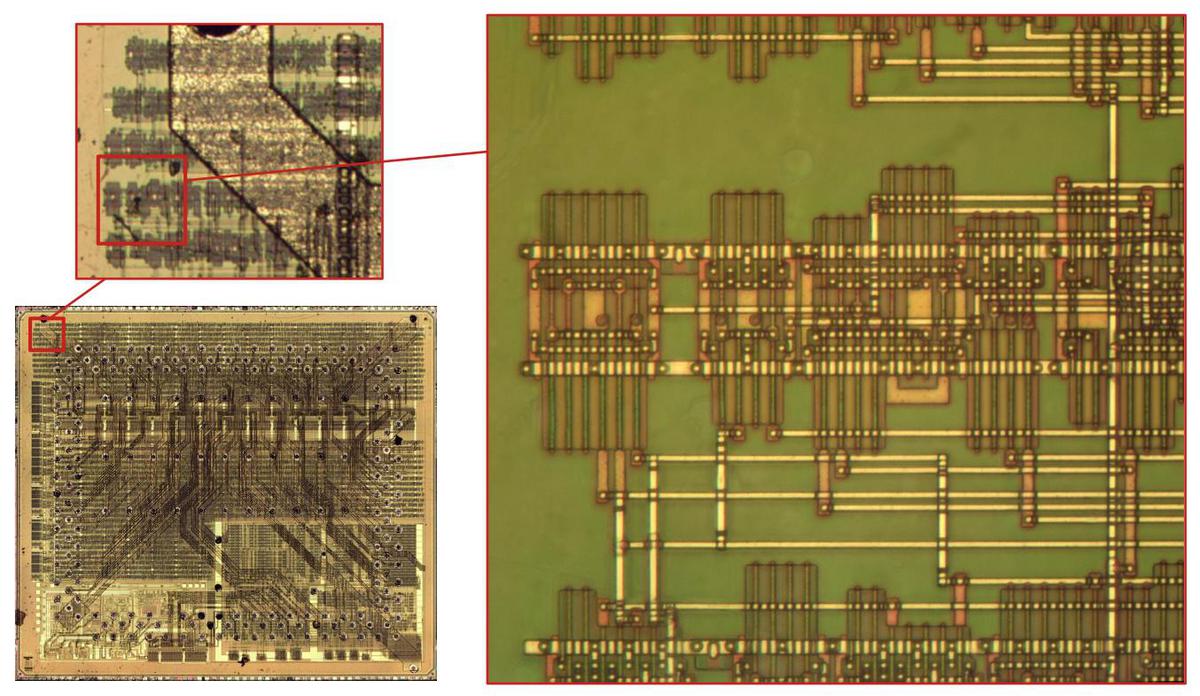

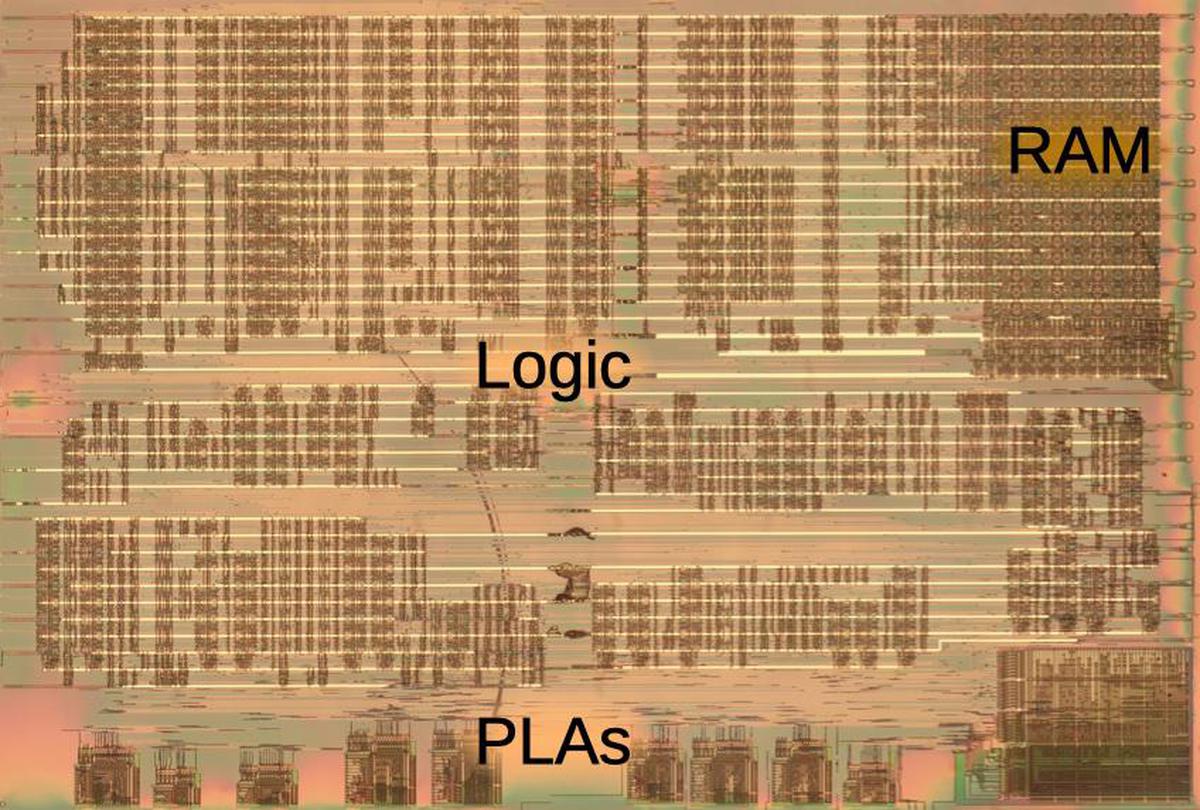

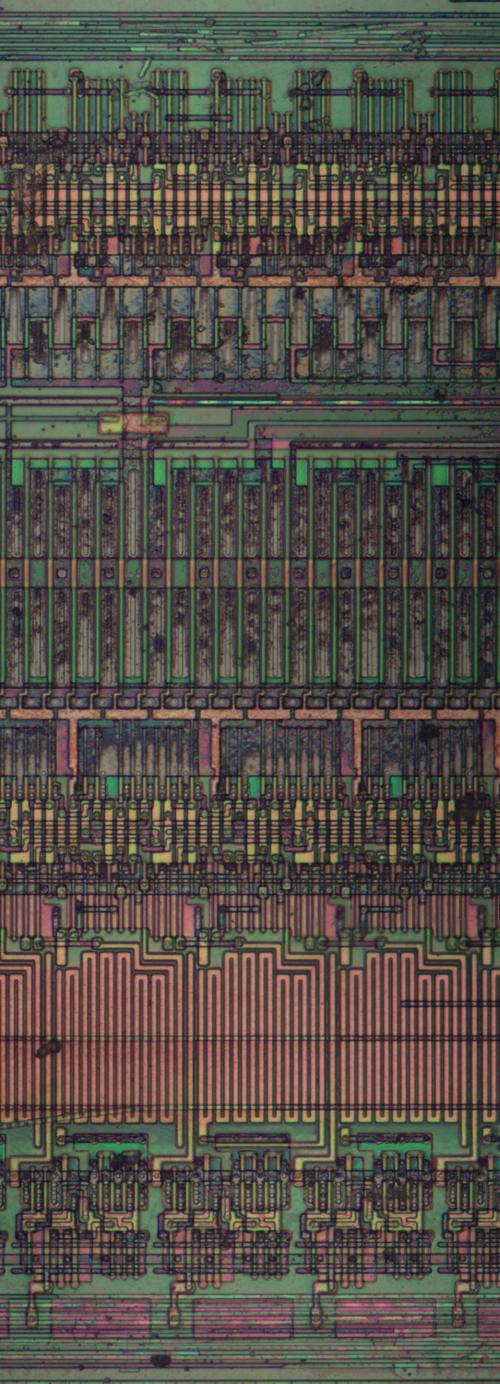

I removed the die from the substrate with the hot-air gun and then dissolved the solder balls with a mixture of hydrogen peroxide and vinegar. By taking numerous photos with a metallurgical microscope, I created the die photo below. The black circles on the die are the positions of the solder balls, more irregular than you might expect. They are not around the edge of the die (as with bond pads), but overlap the circuitry. The chip is fairly large, about 9×7.9 mm, with features of about 1µm. Note the horizontal rows of circuitry; these are standard cells, which I will discuss below.

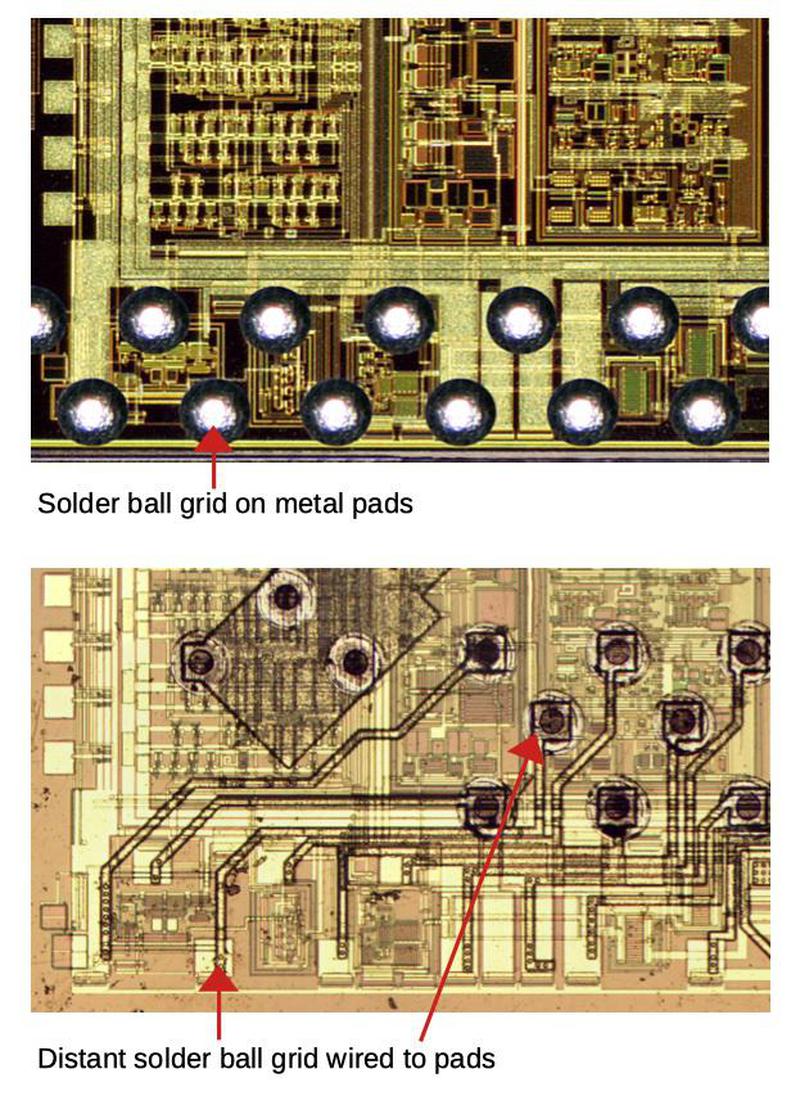

The pattern of solder balls is more visible in Antoine Bercovici's photo below. There are rows three-deep of solder balls along the four sides, as well as rows through the middle of the chip and more in the corners. Roughly speaking, the solder balls around the edges are for signals, while the solder balls in the middle distribute power and ground. Note the tangled metal wiring on top of the chip that connects the solder balls to the underlying circuitry.4

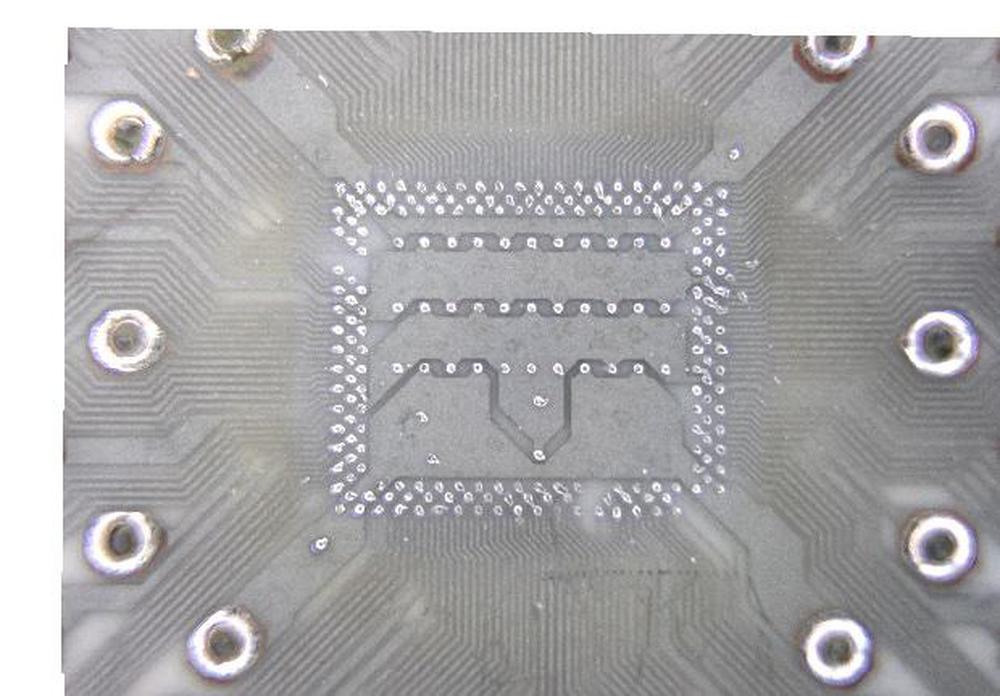

The photo below shows a closeup of the ceramic substrate that holds the die; compare the pattern to the die above.5 The die was soldered to the rectangular array of contacts in the middle, while the large circles around the edge of the photo are the pins of the chip. Note the dense, complex wiring pattern between the pins and the tiny contacts. The wiring traces are extremely thin (about 30µm), with thicker traces for power and ground. The contacts form a complex pattern: most are in a rectangular array, three deep. However, there are also rows of contacts through the center of the chip, connected alternately to power and ground by the thick traces inside the rectangle, and a few scattered contacts. The contact pattern on the substrate was optimized for the layout of this particular chip. Power distribution was a particular concern.

It's interesting to consider the hierarchy of connections between the coarse 0.1" grid of the chip's pins and the tiny 1µm features on the chip. At the top level, the pin spacing is 0.1" in a 14×14 grid. The solder balls have a spacing of 0.01", so the ceramic substrate reduces the spacing by a factor of 10. The solder balls are connected to the wiring on top of the die, spaced at 0.001", increasing the density by another factor of 10. The top wiring is connected to the underlying wiring on the chip, with a spacing of 0.0001", another factor of 10. Finally, the feature size on the die is about 1µm, another factor of 2.
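The arithmetic behind this hierarchy can be checked with a short sketch. The spacings are the approximate figures from the paragraph above, converted to micrometers (1 inch = 25,400 µm); the names `levels` and `scale_factors` are just for illustration.

```python
# Approximate spacing at each level of the connection hierarchy, in micrometers.
levels = [
    ("package pins", 2540.0),       # 0.1 inch
    ("solder balls", 254.0),        # 0.01 inch
    ("top-layer wiring", 25.4),     # 0.001 inch
    ("underlying wiring", 2.54),    # 0.0001 inch
    ("feature size", 1.0),          # about 1 micrometer
]

def scale_factors(levels):
    """Return (level name, reduction factor relative to the previous level)."""
    return [
        (name, prev_pitch / pitch)
        for (_, prev_pitch), (name, pitch) in zip(levels, levels[1:])
    ]

for name, factor in scale_factors(levels):
    print(f"{name}: about {factor:.1f}x finer than the level above")

overall = levels[0][1] / levels[-1][1]
print(f"overall: about {overall:.0f}x from pin spacing to feature size")
```

The last step works out to roughly 2.5, consistent with the "factor of 2" above, and the overall reduction from pin spacing to feature size is a bit over three orders of magnitude.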

With this type of packaging, you can visualize the die position by looking at the underside of the IC (below). Because the chip is soldered directly to the substrate, there are no pins where the chip is attached. Thus, the spot with no pins indicates the position of the die.

Inside the chip

The die photo below shows the chip with most of the metal layers dissolved, making the transistor structure underneath visible. The chip has three main components: a 16-bit microprocessor CPU, an analog front end for the network signals, and 24,000 logic gates for the main functionality. The chip also has some buffer RAM at the left, and I/O drivers in the middle and bottom. (IBM originally implemented the token ring interface with six analog and digital chips. To decrease cost, they put all the functionality onto a single chip, resulting in the combination of analog and digital circuitry.)

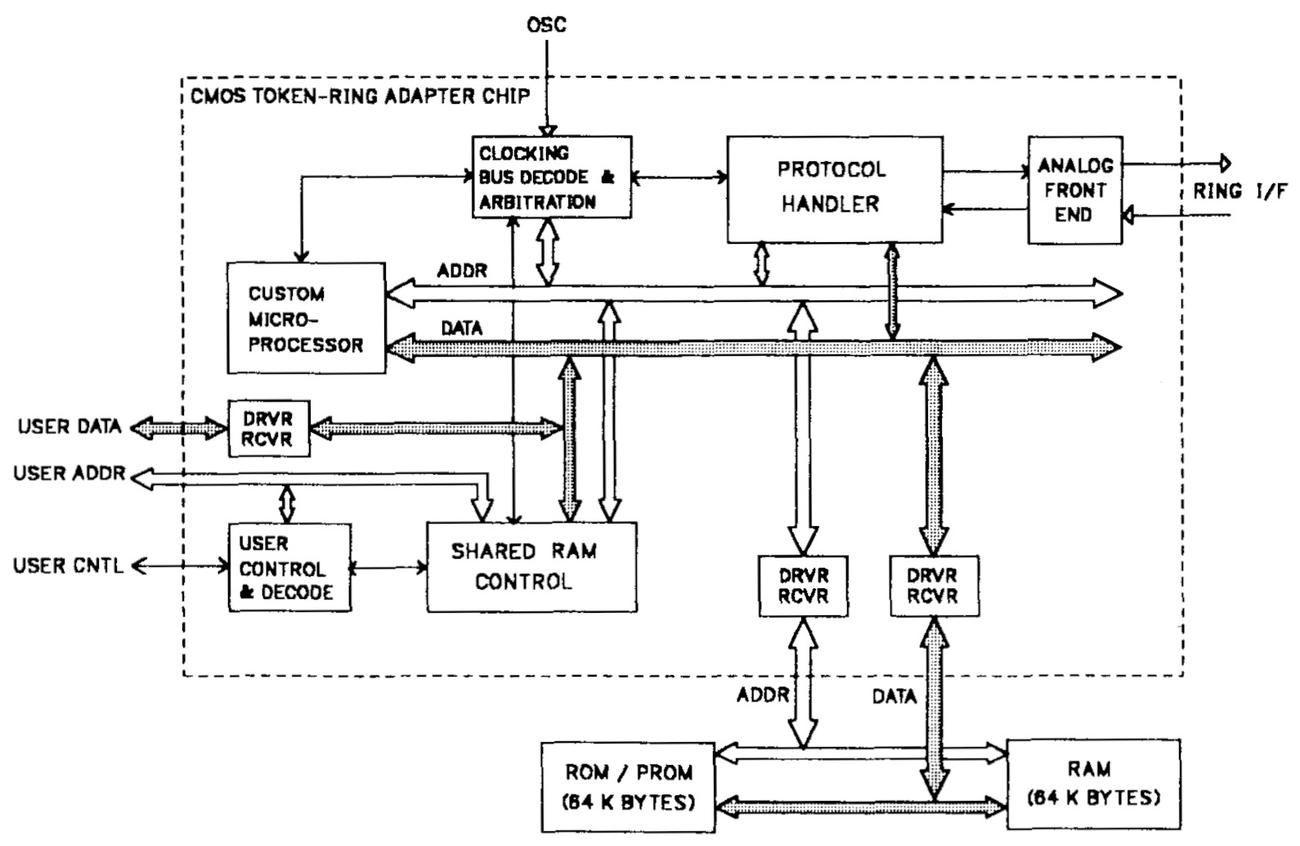

The block diagram below shows the complex functionality of the chip. Starting in the upper right, the analog front end circuitry communicates with the ring. The analog front end extracts the clock and data from the network signals. The protocol handler implements the low-level token ring protocol: it decodes data, breaks packets into frames and performs error checking. Network data is moved between on-chip buffers and the external RAM by the shared RAM control. Finally, a custom 16-bit microprocessor implements the data link layer protocols and controls the chip.

Standard-cell logic

The chip's logic is implemented with a CMOS standard cell library and consists of about 24,000 gates. The idea of standard-cell logic is that each function (such as a NAND gate or latch) has a standard layout. These cells can then be combined by automated design tools to create the desired logic. (This is in contrast to older methodologies, where the designer would lay out each transistor individually, either on paper or using design software.) Standard cells make chip design much easier, since software can do the circuit synthesis, layout, and routing. However, the design isn't as flexible or optimized as a fully-custom circuit.

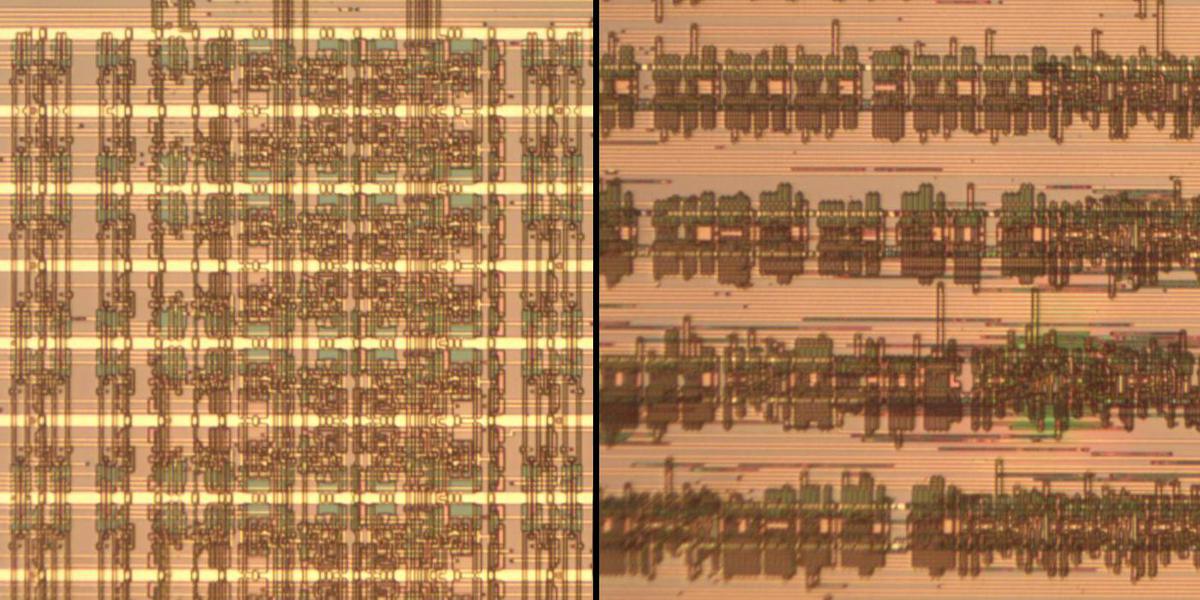

The standard cell layout is visible on the chip, with the cells arranged in uniform rows, connected by horizontal and vertical wiring. The diagram below magnifies the die to zoom in on five rows of standard-cell logic, and then a single row, to show how small the cells are on the die.

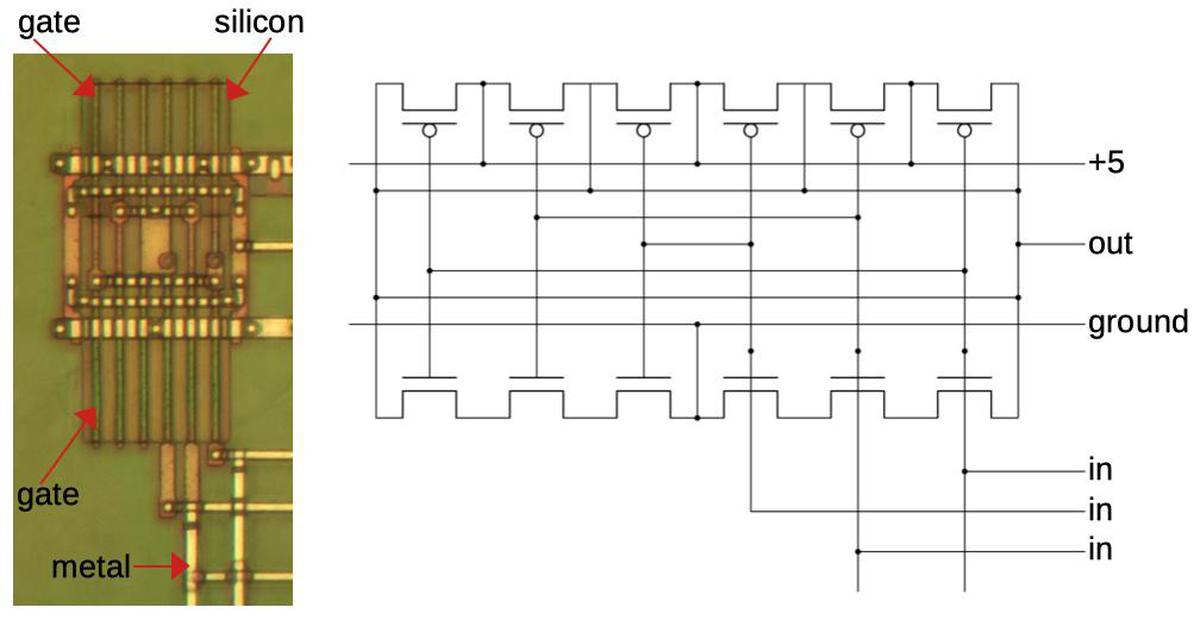

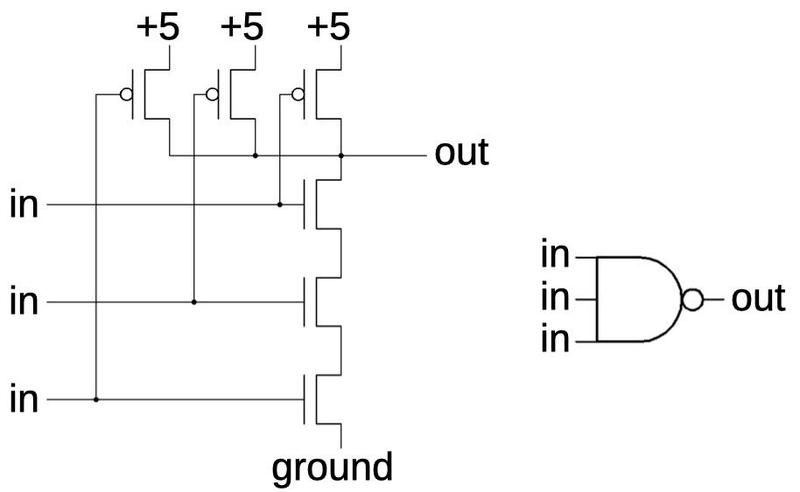

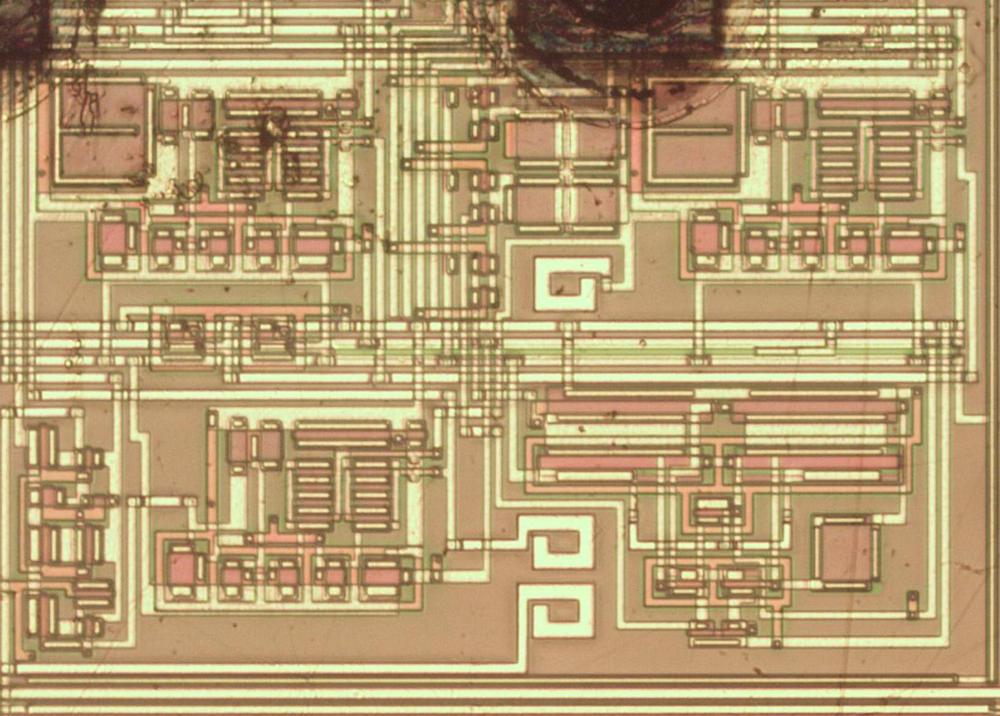

The standard cell below implements a 3-input NAND gate, and I'll explain how it is constructed.6 There are 6 PMOS transistors on top and 6 NMOS transistors on the bottom. The transistors are formed from a region of doped silicon at the top and another at the bottom. Vertical lines of polysilicon, a special type of silicon, form the transistor gates. Polysilicon is also used for vertical wiring inside the cell. The chip has three layers of metal: the bottom layer is used for horizontal wiring, the middle layer is used for vertical wiring, and the top layer connects to the solder balls. Horizontal metal wiring connects the transistors inside the cell and connects the cell to other cells. The two thick horizontal metal wires provide power and ground for the cell. The second, vertical metal layer provides vertical wiring across and between cells. This layer also implements the power connections between the solder balls and the horizontal power wiring visible here. The round dots are connections between layers (silicon, polysilicon, or metal). The schematic on the right matches the layout of the cell.

In the schematic below, I've removed the redundant transistors and rearranged the layout to make the NAND circuit more clear. If all inputs are 1, the NMOS transistors at the bottom turn on, pulling the output low. If any input is 0, a PMOS transistor turns on, pulling the output high. Thus, the circuit implements a NAND gate.
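To make the pull-up and pull-down behavior concrete, here is a minimal switch-level sketch of the simplified gate. It assumes the usual CMOS NAND arrangement (NMOS transistors in series to ground, PMOS transistors in parallel to power), which matches the description above; the function name `cmos_nand3` is hypothetical.

```python
from itertools import product

def cmos_nand3(a, b, c):
    """Switch-level model of a 3-input CMOS NAND gate (inputs are 0 or 1)."""
    a, b, c = bool(a), bool(b), bool(c)
    # NMOS transistors in series: the path to ground conducts only if all are on.
    pull_down = a and b and c
    # PMOS transistors in parallel: any input at 0 connects the output to power.
    pull_up = (not a) or (not b) or (not c)
    # Exactly one network conducts, so the output is never floating or shorted.
    assert pull_up != pull_down
    return 1 if pull_up else 0

for a, b, c in product((0, 1), repeat=3):
    print(a, b, c, "->", cmos_nand3(a, b, c))
```

The output is 0 only for the input combination 1, 1, 1, which is the NAND truth table.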

To summarize, standard-cell logic provides a convenient, automated way of implementing logic. A small number of standardized cells implement the basic logic functions. These cells are arranged in rows and wired together to create the desired logic. (From the teardown perspective, standard-cell logic is somewhat disappointing, since the high-level structure is not visible; it's just a bunch of uniform cells.)

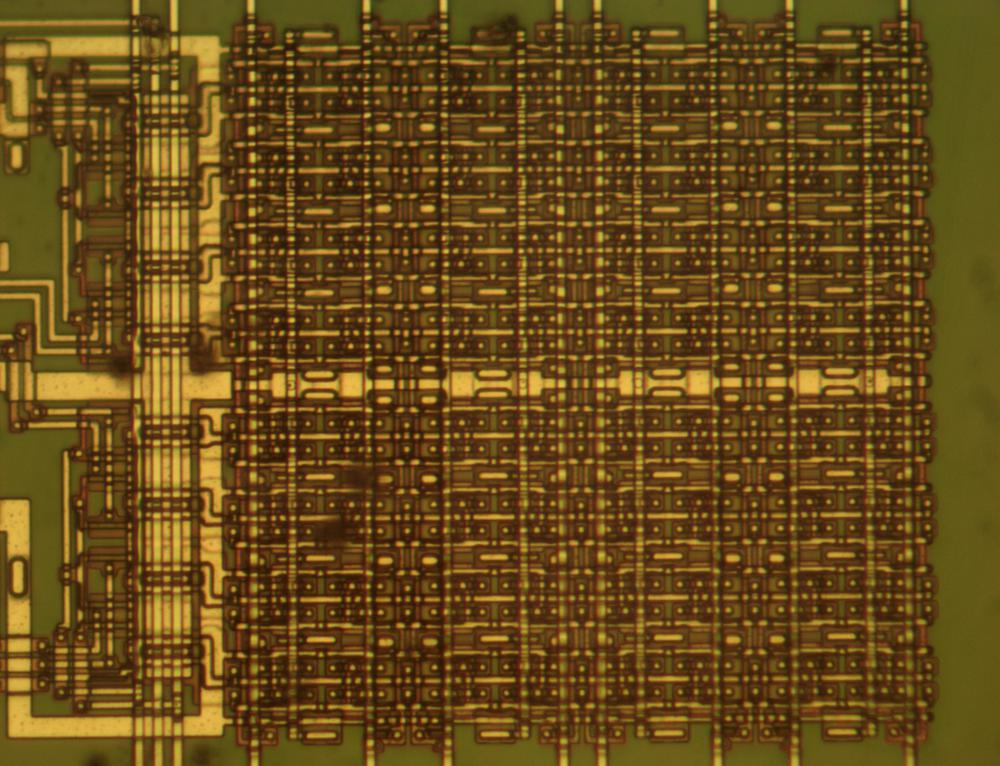

The logic circuitry includes some static RAM buffers to hold network data. These were custom-implemented (as were the I/O drivers) instead of using standard cells. The photo below shows a block of RAM cells.

Inside the CPU

The chip contains a 16-bit CMOS control microprocessor that was custom-designed by IBM7 and contains about 10,000 gates. This processor handles the network protocol, controls transmit and receive operations, and manages the shared memory. It runs at 5.34 megahertz and performs about 3 MIPS (million instructions per second). The microprocessor runs code from an EPROM on the board. IBM calls this "microcode", but it's unclear if this is microcode in the usual sense or just firmware instructions.

The CPU is built with standard-cell logic (except for the RAM and ALU), but curiously the cell layout is entirely different from the rest of the chip, presumably because it had different designers. The photo below compares the CPU's logic (left) with the other logic (right). The CPU fits 7 rows of logic in the same vertical space that holds 4 rows of the regular logic. On the other hand, the logic on the right appears to be much denser horizontally.

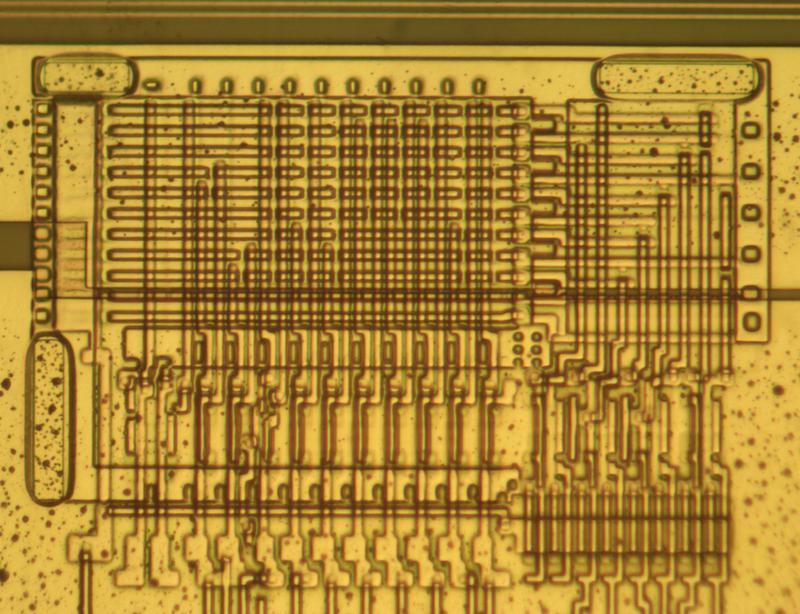

One design feature of the CPU that's visible on the die is its use of multiple PLAs (programmable logic arrays) for instruction decode and control. (Looking at the photo, I count nine small PLAs and a large PLA in the corner.) A PLA provides a structured and dense way of implementing logic (typically AND-OR logic). More importantly, PLAs also provided flexibility and the ability to easily change the design. In the PLA below, 12 signals enter at the lower left. The matrix above converts these to 11 signals that pass to the right. The second matrix generates 8 outputs. The contents of the PLA are visible as the pattern in the metal layer. Since the PLA could be modified by changing the chip's metal layer, bug fixes could even be done after the silicon had been etched.
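The two-matrix structure of a PLA can be modeled as an AND plane feeding an OR plane. The sketch below is a generic illustration with made-up contents and a tiny size (3 inputs, 3 product terms, 3 outputs); it is not the decode logic of this CPU, and the real PLA may be implemented with a different but equivalent gate structure. The name `pla_eval` and the data layout are assumptions for the example.

```python
def pla_eval(inputs, and_plane, or_plane):
    """Evaluate a PLA.

    inputs:    list of 0/1 input values.
    and_plane: list of product terms; each term maps an input index to the
               value (0 or 1) that input must have for the term to be true.
    or_plane:  list of outputs; each output lists the product-term indices
               that are ORed together.
    """
    terms = [all(inputs[i] == v for i, v in term.items()) for term in and_plane]
    return [int(any(terms[t] for t in output)) for output in or_plane]

# Hypothetical contents, standing in for the pattern in the metal layer.
and_plane = [
    {0: 1, 1: 0},  # term 0: input0 AND NOT input1
    {0: 0, 1: 1},  # term 1: NOT input0 AND input1
    {2: 1},        # term 2: input2
]
or_plane = [
    [0, 1],  # output 0: term0 OR term1 (XOR of input0 and input1)
    [2],     # output 1: term2
    [0, 2],  # output 2: term0 OR term2
]

print(pla_eval([1, 0, 0], and_plane, or_plane))
```

Changing the logic means editing only the two tables, not the evaluation code, which is analogous to fixing a bug by changing just the PLA's metal pattern.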

The CPU contains memory cells for register storage (which they call a 16×16 cache). This RAM design is different from the RAM design in the logic circuitry.

Analog circuitry

The chip contains a block of analog circuitry implemented in CMOS. This circuitry "performs signal conversion and clock recovery functions as well as detecting and compensating for line impairments". This circuitry includes resistors, capacitors, MOS transistors with special properties, and other components.8 The analog block uses a variety of circuits such as op-amps, switched-capacitor amplifiers, voltage references, peak detectors, a charge pump, voltage-controlled-oscillator, and phase-locked loop.

One challenge in the design was to minimize "jitter" in the clock signal extracted from the network data. Because each node retransmitted the data, jitter would accumulate as a packet traversed the ring, so each node had to be accurate. They used a variety of techniques to keep noise out of the signal such as providing separate power and ground for the analog circuitry, using differential signals in the circuitry, and keeping logic signals away from the analog circuitry.

The analog circuitry made the chip much more complex to manufacture and test.9 The capacitors and special transistors required special process steps during manufacturing. Manufacturing tolerances were also much tighter since process variations could change the electrical characteristics enough to make the analog circuitry stop performing. Some of the analog circuitry was too sensitive to be tested on the wafer and couldn't be tested until the chip was packaged, making failed chips much more costly. Even so, IBM found it worthwhile to put the analog circuitry on the chip.

Shrinking the chip

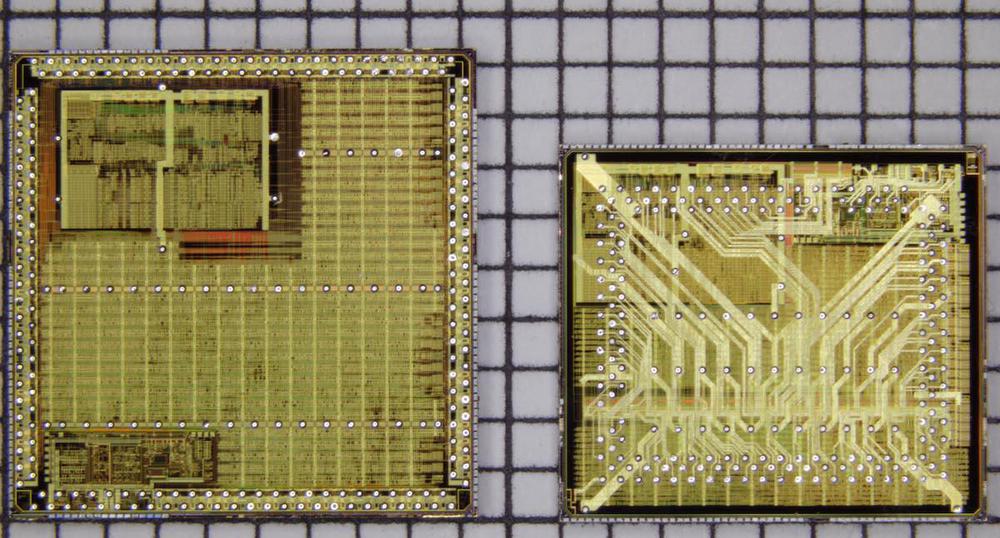

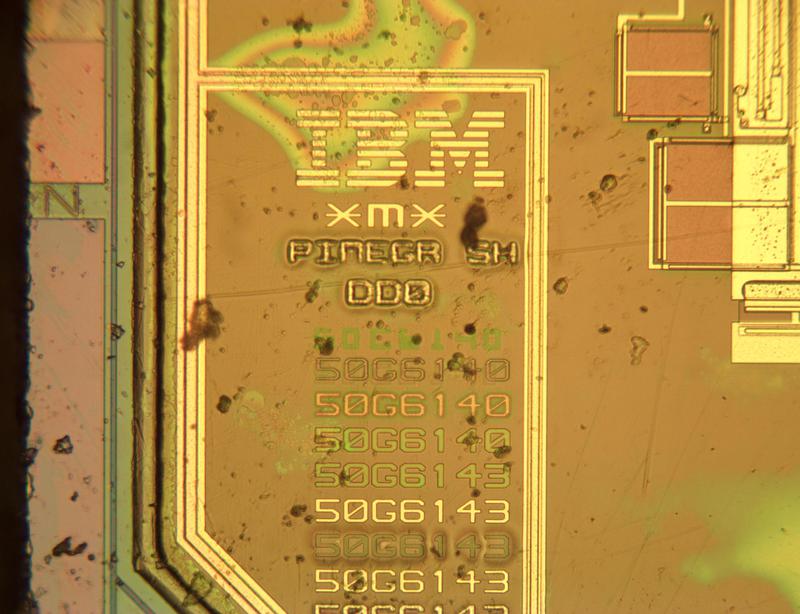

IBM originally made the token ring chip in 1988. The chip I examined is a smaller version from 1994. The photo below compares the two chips on a 1 mm grid; the older, larger chip is on the left. Note that both chips have the same microprocessor block (upper left corner) and the same analog block (lower left / upper right corner). The height of the standard cell logic rows is much smaller in the newer chip, probably how they shrunk the logic. The solder balls on the left connect to the underlying circuitry, while the solder balls on the right are routed all over the chip by a third layer of metal.

The analog section from the old chip was copied to the new chip unchanged, but the connections to solder balls are very different, showing the change in wiring techniques. In the old chip (left), the solder balls are on top of metal pads that are connected to the circuitry. The layout is similar to integrated circuits that use wire bonding and bond pads. In the new chip (right), the solder ball grid is not anchored to the underlying chip architecture, but follows its own constraints. A new layer of metal connects the solder balls to the pads. The pads remain in their atavistic positions, despite being unused in the new chip.

The token ring board

I'll just say a bit about the token ring board that contains this chip. The board is an ISA card from 1994. The IBM chip dominates the board, but there are also numerous other chips, largely 74F-series TTL. There's also a square (and curiously thick) Lattice chip, probably a GAL (Generic Array Logic). A GAL is a programmable logic chip, combining AND/OR logic with flip-flops. A Signetics chip with an IBM label on top is probably a field-programmable logic array (FPLA). Despite all the complexity of the IBM chip, the board requires a lot of programmable logic and simple logic ICs, mostly to interface to the computer's ISA bus. The board has 64 kilobytes of RAM to store network data, two Toshiba TC55329 32K×9 bit static RAM chips. This RAM is accessible both by the network card and by the host PC. The code for the internal microprocessor is contained in an EPROM chip on the board, an AMD 27C1024 chip holding 128 kilobytes as 16-bit words. The EPROM chip has an adhesive label on it with the IBM part number 73G2042, indicating the microcode version.
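As a quick sanity check on the memory sizes above, the arithmetic can be written out. One assumption is labeled in the code: that the ninth bit of each 32K×9 RAM chip is used for parity rather than data, which would explain the 64-kilobyte total.

```python
KIB = 1024

# Static RAM: two chips, each 32K words of 9 bits.
sram_chips = 2
sram_words_per_chip = 32 * KIB
sram_data_bits = 8  # assumption: the ninth bit is parity, not data
sram_bytes = sram_chips * sram_words_per_chip * sram_data_bits // 8

# EPROM: 128 kilobytes organized as 16-bit words.
eprom_bytes = 128 * KIB
eprom_words = eprom_bytes // 2
eprom_address_bits = eprom_words.bit_length() - 1

print(sram_bytes // KIB, "KiB of RAM")
print(eprom_words, "EPROM words,", eprom_address_bits, "address bits")
```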

The right side of the board holds the analog circuitry to interface with the network. Five pulse transformers provide electrical isolation between the interface board and the potentially-dangerous voltages of the network. Two bypass relays disconnect the card from the ring when not in use, preserving the ring's connectivity. There are also two transistor arrays along with resistors and capacitors to condition the network signals before passing them to the token ring chip. The card connects to the network via an RJ-45 connector that can be used with unshielded twisted-pair (UTP) cable. It also has a DB-9 connector on the back that can be used with shielded twisted-pair (STP).11

In the 1980s, many different local area networking standards were competing including Ethernet, Token Ring, Datapoint's ARCnet, Apple's LocalTalk, Omninet, and Econet. By the early 1990s, Ethernet won due to a combination of factors: much lower cost (about 1/5 the cost of Token Ring), less complexity leading to faster technological improvement (such as 100 Mb/s Ethernet and switched Ethernet), and a wider ecosystem than IBM provided.10 The complexity of the chip reflects the complexity of Token Ring and illustrates that IBM's technological edge in the 1980s was a double-edged sword: although it initially gave Token Ring a large performance advantage, the simpler technology of Ethernet eventually won.12

Thanks to Antoine Bercovici for die photos and information. Thanks to my Twitter readers for discussion. I announce my latest blog posts on Twitter, so follow me @kenshirriff. I also have an RSS feed.

Notes and references

-

IBM's token ring network was inspired by ring network research from the 1970s, such as the Cambridge Ring. ↩

-

IBM called their integrated circuits MST, Monolithic System Technology. ↩

-

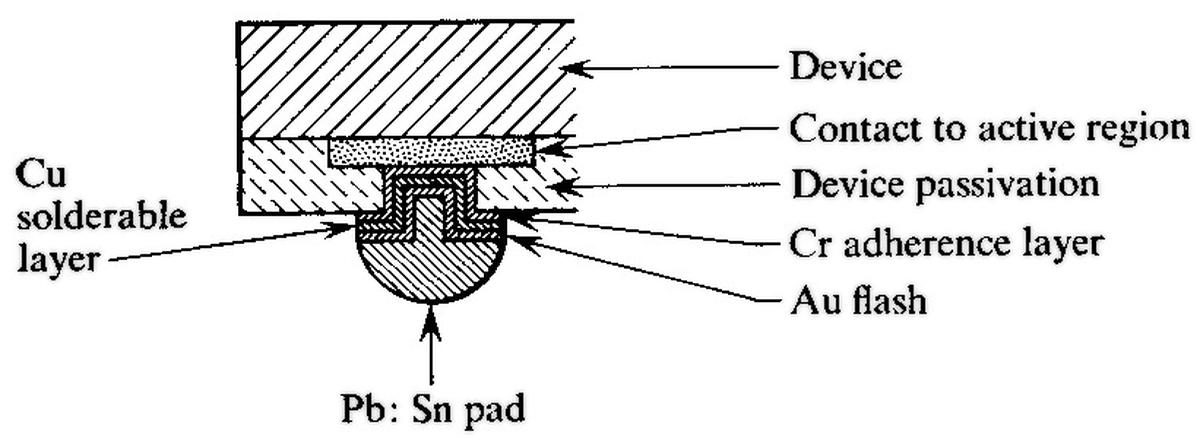

The diagram below illustrates the complex construction of a solder ball on the die. Thin layers of aluminum, chromium, copper, and gold are put on the silicon to obtain the necessary properties, followed by a layer of lead-tin solder, which is reflowed to form the balls. The chromium bonds to the oxide layer, while the copper provides solderability and the gold protects the copper from oxidizing.

Diagram of a solder pad, from this paper.

-

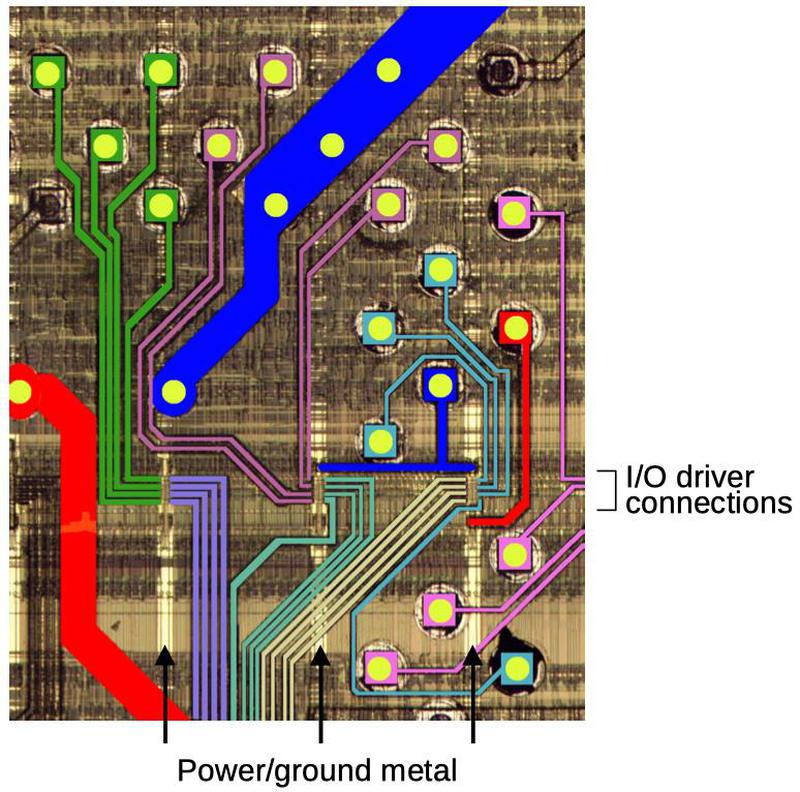

The metal wiring on the top layer of the chip looks like a mess, but there is some structure behind it. The diagram below shows a small section of this wiring, colored to show the structure. The solder balls are shown in yellow. The red and blue traces transmit power and ground from the solder balls across the chip. These traces connect with the vertical strips of metal wiring that transmit power and ground throughout the chip. The other wiring connects the signal solder balls to the I/O drivers, converging in a narrow band in groups of four. Most of the solder balls are positioned with little regard for the underlying circuitry; the top metal layer provides the "glue" between them and the integrated circuit itself. The result is the peculiar metal pattern visible on top of the chip.

The colored lines show how the top layer of metal wiring connects the solder balls to the chip.

In most integrated circuits, the I/O drivers are around the edges of the chip next to the bond pads. However, in this chip, most of the I/O drivers stretch in a line across the middle of the chip (indicated above). More I/O drivers are at the bottom of the chip next to the CPU, probably connected to it directly.

The photo below shows three I/O drivers, side by side. The metal layers have been mostly removed to reveal the silicon underneath. These drivers are fairly complex. The top half contains large drive transistors to provide relatively high-current outputs, along with smaller control transistors. The lower half contains reddish serpentine resistors made out of polysilicon. These resistors help protect the sensitive gates of the input transistors from static discharges. For output pins, these resistors are disconnected. The middle resistor, however, is connected to the input transistor near the bottom.

Die photo of three I/O drivers.

-

The die is flipped over when soldered to the substrate. This needs to be kept in mind when comparing the die and the substrate. For instance, the two extra power connections for the CPU are in the lower right of the die but the lower left of the substrate. (Just a note to avoid potential confusion.) ↩

-

I'm not sure which transistors are NMOS and which are PMOS in the gate. I'm assuming the PMOS are on top and it's a NAND gate, but it could be the other way around, in which case it's a NOR gate. ↩

-

The processor is described as using IBM's "universal controller (UC) architecture" but there's very little information about this architecture. Wikipedia claims this architecture consisted of UC0 (8-bit), UC.5 (16-bit), and U1 (32-bit), with upwards compatibility. An alt.folklore.computers thread and this page provide a bit more information. ↩

-

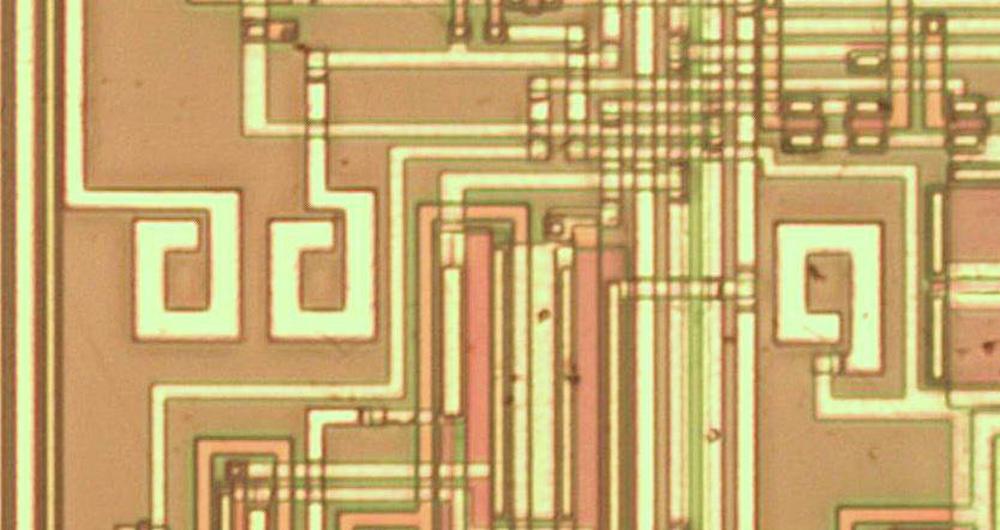

The analog circuitry contains small loops of various sizes that I was unable to identify. They are only connected on one end and have nothing underneath, so they don't seem to be inductors. Twitter readers suggested probe points, disconnected circuitry, or reflective delay lines, but their function remains unclear.

Three of the loops on the die.

-

The designers were very proud of the testability of the chip, writing a paper about the testing methodology, and a second paper about testing the analog circuitry. The chip includes a boundary scan feature (kind of like JTAG) and built-in self-test features, as well as mechanisms to isolate the analog block and the CPU for separate testing. ↩

-

Much of the information about this chip comes from A 16-Mbit/s adapter chip for the IBM token-ring local area network. That article describes an earlier version of the chip, so I can't be sure everything is accurate when applied to this chip. (It appears to me that the chips are the same apart from the smaller size of the newer chip.) One source says the two chips are compatible. The older chip has part number 51F1439 while the chip I examined is 50G6144.

For information on Token Ring, the book The Triumph of Ethernet: Technological Communities and the Battle for the LAN Standard discusses the competition between network protocols in great detail. You might also like Foone's Twitter thread on Token Ring. Interestingly, one of the original "ENIAC Women", Jean Bartik, wrote a 1984 article on Token Rings—"IBM's Token Ring: Have the Pieces Finally Come Together?"—but unfortunately I haven't been able to locate a copy. ↩

-

Token Ring cables could be joined using the "IBM Data Connector", a curious type of connector. The connectors are known as hermaphroditic because two connectors can be joined without worrying about male and female ends. The connectors were nicknamed "Boy George" connectors after the androgynous singer, which seems questionable by current standards. (The nickname may also be motivated by the BOGR text on the connector, which I think indicates the black, orange, green, and red wires.)

IBM Data Connector. Photo from Redgrittybrick, (CC BY-SA 3.0).

-

The book The Innovator's Dilemma describes how a low-end but innovating technology can defeat an advanced, entrenched technology. I haven't investigated Token Ring versus Ethernet enough to be sure this model applies, so consider it a hypothesis. ↩

8 comments:

I worked as a summer helpdesk intern at IBM's Yorktown research center when I was in college, between 1989 and 1991. The whole building relied heavily on Token Ring at that point, primarily for connectivity between desktop PCs (the Model 70 and 80 microchannel PS/2s were the in thing at the time) and the center's 3070 mainframes. A surprising amount of work there took place using the 3070's, and only some with PC-based software, many of them were just acting as 3270 terminal emulators.

In any event, connecting these PCs to the Token Ring was a remarkably tedious and hardware intensive affair, requiring a large external relay box that could handle attaching up to two PCs onto the ring, and loads of those incredibly bulky BOGR cables. The relay boxes and cables were both supplied by the Onan corporation, better known for generators than computer cabling, but the name seems mildly ironic (biblically speaking) given the Boy George moniker.

The network was down frequently with faults occurring in local sub-rings on a regular basis, and often blamed on a station "beaconing", whatever that meant, probably incorrectly connected in some fashion, and taking down the rest of the associated ring with it. At least with star/switch topology, a single bad port doesn't usually kill a whole chunk of customers anymore.

I'll comment from the point of view of a former Point of Sale tech who worked with Token ring extensively. It was robust until someone pulled the #1 cable from a register breaking the ring, unless they had a relay that closed keeping the ring unbroken. One major negative came when I worked on a migration from token ring to ethernet which was only one device can have the token. What this meant is when registers had to down load their software after a reboot (IPL in IBM parlance) only one could download at a time with Token ring, the other registers would wait. With ethernet I could reload 13 registers at a time

@Andy, the problems you experienced were a direct result of the Onan box. That product was what caused the beaconing. Beaconing was a condition in which the ring was broken, due to a faulty relay in the Onan box most likely. When a station on the ring detected that the ring was broken, by not receiving a token in a specified number of milliseconds, the station would transmit a beacon packet several times per second. If a station received its own beacon back, then it would stop beaconing and normal operation would resume. If a station received a beacon sourced from a different MAC address, it would stop beaconing and repeat the beacon from the upstream station. What this did was quickly (in less than a second usually) find the station that was causing the ring to be broken. The beacon packet contained the MAC address of the most recent upstream neighbor and thus allowed the problem to be pinpointed. Token ring was in fact star wired if you used IBM 8228 and 8230 access units. The Onan box was a kludge.

You are correct that switched networks don't have this problem....but switched Ethernet (and Token Ring) were not common until 1994, invented by a little company called Kalpana. In fact low cost switched Ethernet is what eventually killed Token Ring. Before switching, Ethernet hubs and collision based transmission were far more difficult to troubleshoot than Token Ring. If a single Ethernet station unplugged without terminating the cable correctly, the whole LAN would go down, and there would be no way to troubleshoot other than walking the length of the whole cable from end to end.

Also, the "incredibly bulky" cable used in Token Ring also had a purpose. Besides being androgynous, it would loopback in the cable end. Thus before entering the ring, each station could test the continuity of the cable. This prevented stations with bad wires from breaking the ring. Token Ring was far more advanced than the average person would imagine. There is a reason most telcos run their networks as metro rings today. IBM Token Ring was very expensive, but far more reliable than other options of the day.

Fascinating to stumble across this site and read about what I worked on right out of college in 1985. I hired into IBM NHD's token-ring organization in Research Triangle Park, NC (retiring next month). I designed cards based on the same chipset you tore down here glued to different bus interfaces for other IBM products, like the 3174 display controller and 3725 communications controller.

I later transitioned to Product Engineering as we called it back then, supporting product in the field. I distinctly remember visiting more than one client in a "crit sit" to troubleshoot on-site, and in at least two incidents, isolating the root cause down to bad design in 3rd party concentrators... names like Onan and Waters come to mind, but not totally sure.

It's funny how now after all these years, in the battle between the nets, neither of them "won," as they both got beat out by WIFI!

I worked as an EE in the Comms and Networking design group at Data General through 1992. I designed a VME Token Ring interface card for the 88000 machines DG was planning to produce (until Motorola discontinued the processor). I later worked for 3Com doing more Token Ring design work.

While technically interesting, Token Ring was a shared media nightmare, even more so than Ethernet. The stiff, proprietary connection cables were a huge hassle. They took up LOTS of space, and weren't any more reliable (connectors and cables are always the least reliable part of any system) than Ethernet with its vampire taps and equally stiff AUI cables (and their woefully inadequate "slide latch" retention).

Our Token Ring boards were about 3x more expensive than our Ethernet boards in parts cost. Ethernet chips "just worked", Token Ring boards had microcode that needed to be loaded and licensed. When we moved to CAT3 cable and switched connections, the connector issue was pretty much solved, but the 16 megabit TR switches were a nightmare to get through radiated EMI testing, thanks to TR's strict signal edge requirements. As soon as 100 megabit Ethernet came on the scene, Token Ring was done for. Simpler, cheaper and faster.

Token Ring went from an amazing marketing exercise that was too complex to be reliable in many real networks (it was OK if super carefully managed using good equipment), to the ridiculous.

I refer to Full Duplex Token Ring. Which was more descriptively called Full Duplex Token-less Ring-less Token Ring. Each "ring" was a FD point to point link between say a PC and a switch and so there was no contention and no need for tokens or rings. :-) :-) That was the end for TR.

Apollo Computers also used a Token Ring. At that time, it was at lower cost and much higher performance than Ethernet. Used just BNC cabling. Fun fact: A single workstation without shortcutting in and out of the ring, won't boot. But paperclips would do...

Another important aspect between the earlier "Spyglass" and "Pinegrove"/"Pinegrove Shrink" is that the "Spyglass" needed a microchannel bus interface chip (25F7355) for the changes for the adaptation to PS/2s, whereas the "Pinegrove" and later ASICs incorporated that on board. The same pin arrangement was used between the two designs, but there were those differences, also shrinking the size of the adapter. See/search the 'Ardent-Tool' website for reference.
