Addition is harder than you'd expect, at least for a computer. Computers use multiple types of adder circuits with different tradeoffs of size versus speed. In this article, I reverse-engineer an 8-bit adder in the Pentium's floating point unit. This adder turns out to be a carry-lookahead adder, in particular, a type known as "Kogge-Stone."1 I'll explain how a carry-lookahead adder works and show how the Pentium implemented it. Warning: lots of Boolean logic ahead.

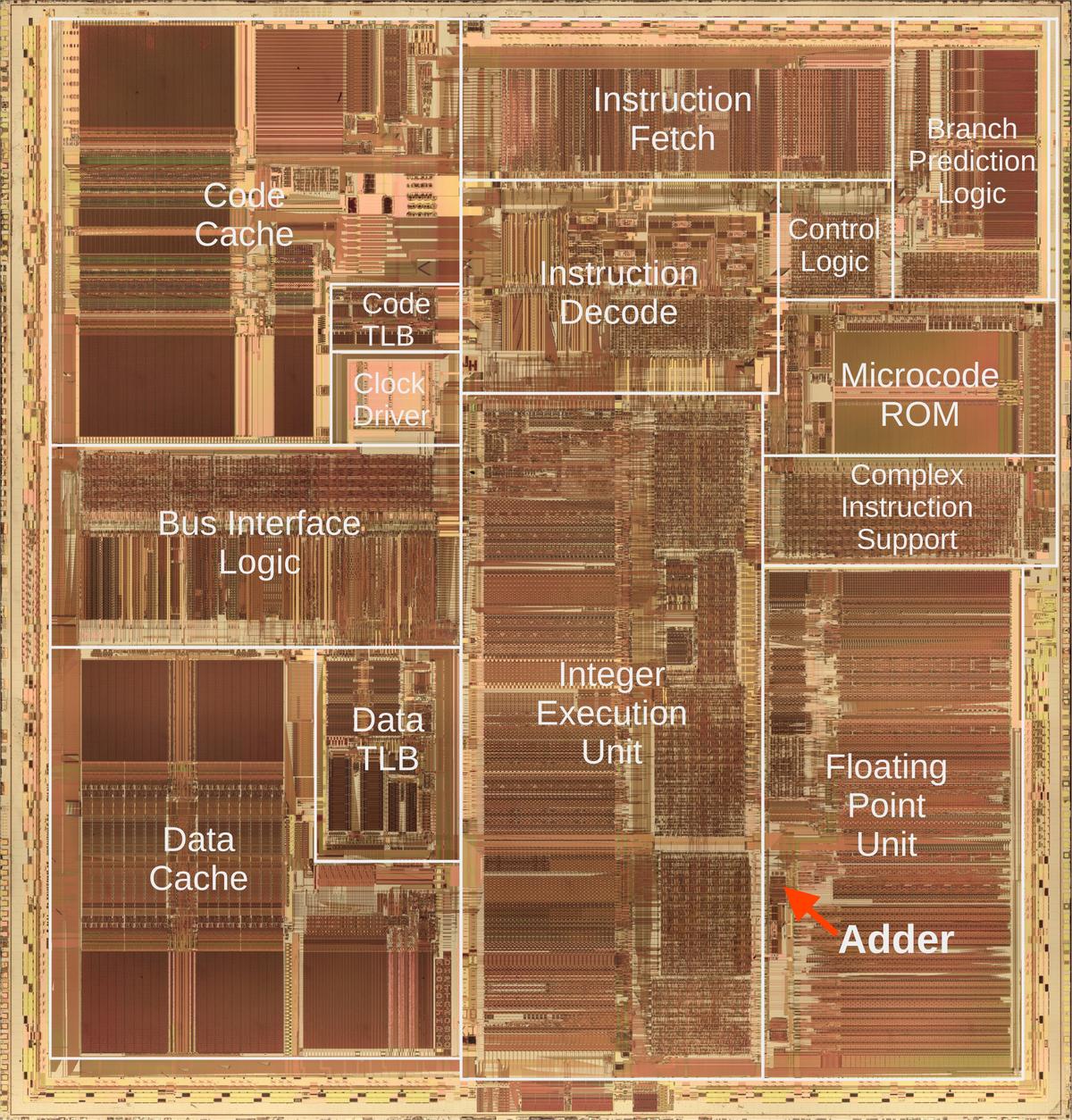

The die photo above shows the main functional units of the Pentium. The adder, in the lower right, is a small component of the floating point unit. It is not a general-purpose adder, but is used only for determining quotient digits during division. It played a role in the famous Pentium FDIV division bug, which I wrote about here.

The hardware implementation

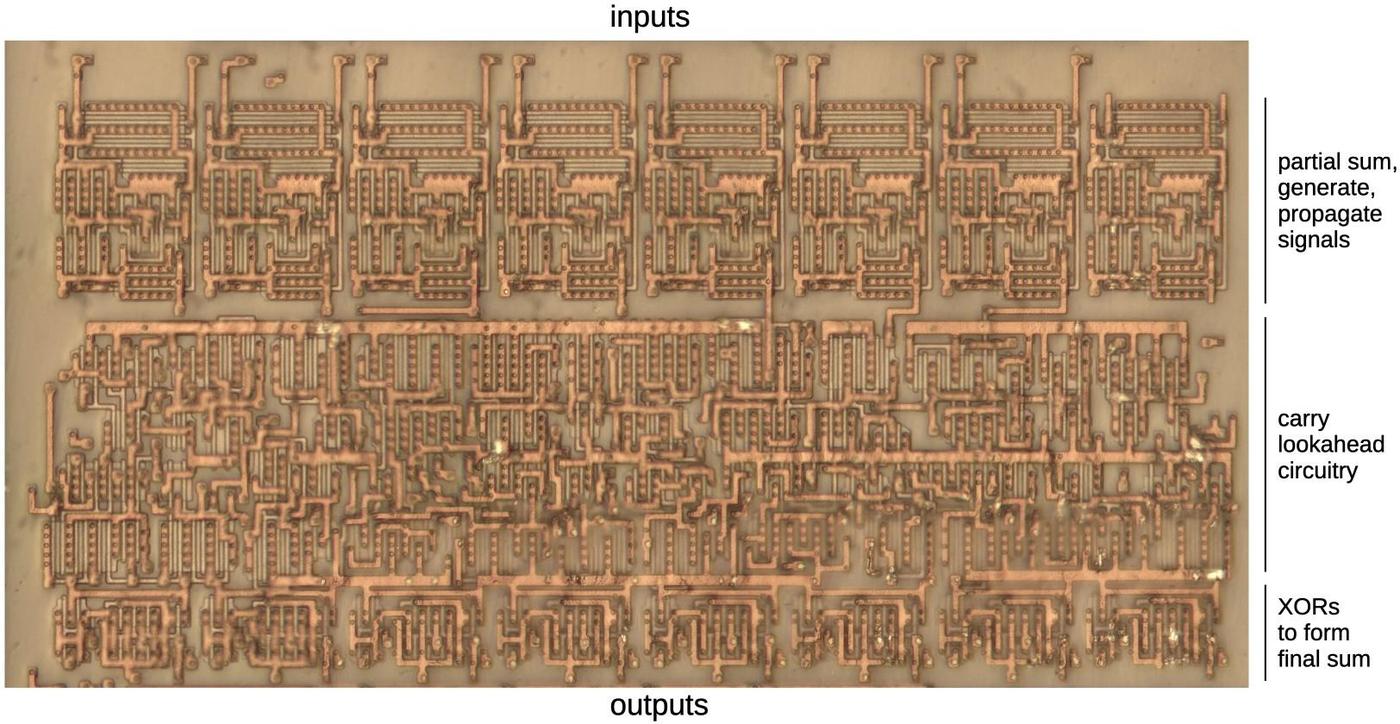

The photo below shows the carry-lookahead adder used by the divider. The adder itself consists of the circuitry highlighted in red. At the top, logic gates compute signals in parallel for each of the 8 pairs of inputs: partial sum, carry generate, and carry propagate. Next, the complex carry-lookahead logic determines in parallel if there will be a carry at each position. Finally, XOR gates apply the carry to each bit. Note that the sum/generate/propagate circuitry consists of 8 repeated blocks, and the same with the carry XOR circuitry. The carry lookahead circuitry, however, doesn't have any visible structure since it is different for each bit.2

The large amount of circuitry in the middle is used for testing; see the footnote.3 At the bottom, the drivers amplify control signals for various parts of the circuit.

The carry-lookahead adder concept

The problem with addition is that carries make addition slow. Consider calculating 99999+1 by hand. You'll start with 9+1=10, then carry the one, generating another carry, which generates another carry, and so forth, until you go through all the digits. Computer addition has the same problem: If you're adding two numbers, the low-order bits can generate a carry that then propagates through all the bits. An adder that works this way—known as a ripple carry adder—will be slow because the carry has to ripple through all the bits. As a result, CPUs use special circuits to make addition faster.
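The ripple can be made concrete with a minimal Python sketch of a ripple-carry adder. The function name `ripple_carry_add` and the 8-bit default width are illustrative choices, not anything taken from the Pentium. Each loop iteration needs the carry from the previous one, which is the serial dependency that makes this design slow in hardware.

```python
def ripple_carry_add(a: int, b: int, width: int = 8) -> tuple[int, int]:
    """Add two width-bit integers one bit at a time; return (sum, carry_out)."""
    carry = 0
    total = 0
    for n in range(width):
        a_n = (a >> n) & 1
        b_n = (b >> n) & 1
        # Full adder for bit n: the sum bit cannot be known until the
        # carry from bit n-1 has been computed.
        s_n = a_n ^ b_n ^ carry
        carry = (a_n & b_n) | (carry & (a_n ^ b_n))
        total |= s_n << n
    return total, carry

# The binary analogue of 99999+1: the carry ripples through every bit.
print(ripple_carry_add(0b11111111, 0b00000001))  # (0, 1)
```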

One solution is the carry-lookahead adder. In this adder, all the carry bits are computed in parallel, before computing the sums. Then, the sum bits can be computed in parallel, using the carry bits. As a result, the addition can be completed quickly, without waiting for the carries to ripple through the entire sum.

It may seem impossible to compute the carries without computing the sum first, but there's a way to do it. For each bit position, you determine signals called "carry generate" and "carry propagate". These signals can then be used to determine all the carries in parallel. The generate signal indicates that the position generates a carry. For instance, if you add binary 1xx and 1xx (where x is an arbitrary bit), a carry will be generated from the top bit, regardless of the unspecified bits. On the other hand, adding 0xx and 0xx will never produce a carry. Thus, the generate signal is produced for the first case but not the second.

But what about 1xx plus 0xx? We might get a carry, for instance, 111+001, but we might not get a carry, for instance, 101+001. In this "maybe" case, we set the carry propagate signal, indicating that a carry into the position will get propagated out of the position. For example, if there is a carry out of the middle position, 1xx+0xx will have a carry from the top bit. But if there is no carry out of the middle position, then there will not be a carry from the top bit. In other words, the propagate signal indicates that a carry into the top bit will be propagated out of the top bit.

To summarize, adding 1+1 will generate a carry. Adding 0+1 or 1+0 will propagate a carry. Thus, the generate signal is formed at each position by Gn = An·Bn, where A and B are the inputs. The propagate signal is Pn = An+Bn, the logical-OR of the inputs.4
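As a small illustration of these definitions, the following Python sketch computes the generate and propagate signals for every bit position. The helper name `generate_propagate` is hypothetical; the formulas are the AND and OR given above.

```python
def generate_propagate(a: int, b: int, width: int = 8) -> tuple[list[int], list[int]]:
    """Return per-bit generate and propagate lists; index 0 is the low-order bit."""
    g = [(a >> n) & (b >> n) & 1 for n in range(width)]    # Gn = An AND Bn
    p = [((a >> n) | (b >> n)) & 1 for n in range(width)]  # Pn = An OR Bn
    return g, p

# The two "maybe" examples from the text, 3 bits wide.
print(generate_propagate(0b111, 0b001, width=3))  # ([1, 0, 0], [1, 1, 1])
print(generate_propagate(0b101, 0b001, width=3))  # ([1, 0, 0], [1, 0, 1])
```

In both cases bit 0 generates a carry. For 111+001, bits 1 and 2 both propagate, so the carry comes out of the top bit. For 101+001, bit 1 does not propagate, so the carry stops there.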

Now that the propagate and generate signals are defined, they can be used to compute the carry Cn at each bit position:

C1 = G0: a carry into bit 1 occurs if a carry is generated from bit 0.

C2 = G1 + G0P1: a carry into bit 2 occurs if bit 1 generates a carry or bit 1 propagates a carry from bit 0.

C3 = G2 + G1P2 + G0P1P2: A carry into bit 3 occurs if bit 2 generates a carry, or bit 2 propagates a carry generated from bit 1, or bits 2 and 1 propagate a carry generated from bit 0.

C4 = G3 + G2P3 + G1P2P3 + G0P1P2P3: A carry into bit 4 occurs if a carry is generated from bit 3, 2, 1, or 0 along with the necessary propagate signals.

... and so forth, getting more complicated with each bit ...

The important thing about these equations is that they can be computed in parallel, without waiting for a carry to ripple through each position. Once each carry is computed, the sum bits can be computed in parallel: Sn = An ⊕ Bn ⊕ Cn. In other words, the two input bits and the computed carry are combined with exclusive-or.
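The equations above can be written out directly as a sketch. The Python below is a software model under the assumption of no carry into bit 0, with hypothetical function names. Each carry is computed only from the G and P lists, never from another carry, which is what lets the hardware evaluate them all at once (the Python loop is sequential, of course).

```python
def lookahead_carries(g: list[int], p: list[int]) -> list[int]:
    """Return c where c[n] is the carry into bit n, from the flat equations."""
    width = len(g)
    c = [0] * (width + 1)  # c[0] = 0: assume no carry into the lowest bit
    for n in range(1, width + 1):
        # Cn = G(n-1) + G(n-2)P(n-1) + ... + G0 P1 P2 ... P(n-1)
        for k in range(n):
            term = g[k]
            for j in range(k + 1, n):
                term &= p[j]
            c[n] |= term
    return c

def cla_add(a: int, b: int, width: int = 8) -> tuple[int, int]:
    """Carry-lookahead addition; returns (sum, carry_out)."""
    g = [(a >> n) & (b >> n) & 1 for n in range(width)]
    p = [((a >> n) | (b >> n)) & 1 for n in range(width)]
    c = lookahead_carries(g, p)
    total = 0
    for n in range(width):
        # Sn = An XOR Bn XOR Cn
        total |= (((a >> n) ^ (b >> n) ^ c[n]) & 1) << n
    return total, c[width]

print(cla_add(200, 100))  # (44, 1), since 300 = 256 + 44
```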

Implementing carry lookahead with a parallel prefix adder

The straightforward way to implement carry lookahead is to directly implement the equations above. However, this approach requires a lot of circuitry due to the complicated equations. Moreover, it needs gates with many inputs, which are slow for electrical reasons.5

The Pentium's adder implements the carry lookahead in a different way, called the "parallel prefix adder."7 The idea is to produce the propagate and generate signals across ranges of bits, not just single bits as before. For instance, the propagate signal P32 indicates that a carry into bit 2 would be propagated out of bit 3. And G30 indicates that bits 3 to 0 generate a carry out of bit 3.

Using some mathematical tricks,6 you can take the P and G values for two smaller ranges and merge them into the P and G values for the combined range. For instance, you can start with the P and G values for bits 0 and 1, and produce P10 and G10. These could be merged with P32 and G32 to produce P30 and G30, indicating if a carry is propagated across bits 3-0 or generated by bits 3-0. Note that Gn0 is the carry-lookahead value we need for bit n, so producing these G values gives the results that we need from the carry-lookahead implementation.
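The article doesn't spell out the merge formula, but the standard formulation is that the combined range generates a carry if the upper range generates one, or if the upper range propagates a carry generated by the lower range; the combined range propagates only if both halves propagate. The Python sketch below (with a hypothetical `merge` helper) implements that formulation and checks by brute force that the operation is associative.

```python
from itertools import product

def merge(high, low):
    """Merge (G, P) for an upper bit range with (G, P) for the range just below it."""
    g_hi, p_hi = high
    g_lo, p_lo = low
    g = g_hi | (p_hi & g_lo)  # generated above, or generated below and propagated up
    p = p_hi & p_lo           # a carry passes through only if both halves propagate
    return g, p

# Associativity: the grouping of merges doesn't matter, for every input combination.
pairs = list(product((0, 1), repeat=2))
assert all(
    merge(merge(x, y), z) == merge(x, merge(y, z))
    for x in pairs for y in pairs for z in pairs
)

# Example: 0111 + 0001. Per-bit (G, P) values, index 0 = low-order bit.
gp = [(1, 1), (0, 1), (0, 1), (0, 0)]
gp10 = merge(gp[1], gp[0])  # bits 1-0
gp32 = merge(gp[3], gp[2])  # bits 3-2
print(merge(gp32, gp10))    # (0, 0): no carry out of bit 3, since 7 + 1 = 8
```

Because the operation is associative, the ranges can be grouped in whatever order gives the best circuit, which is the freedom that the different prefix-adder designs exploit.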

This merging process is more efficient than the "brute force" implementation of the carry-lookahead logic since logic subexpressions can be reused. This merging process can be implemented in many ways, including Kogge-Stone, Brent-Kung, and Ladner-Fischer. The different algorithms have different tradeoffs of performance versus circuit area. In the next section, I'll show how the Pentium implements the Kogge-Stone algorithm.

The Pentium's implementation of the carry-lookahead adder

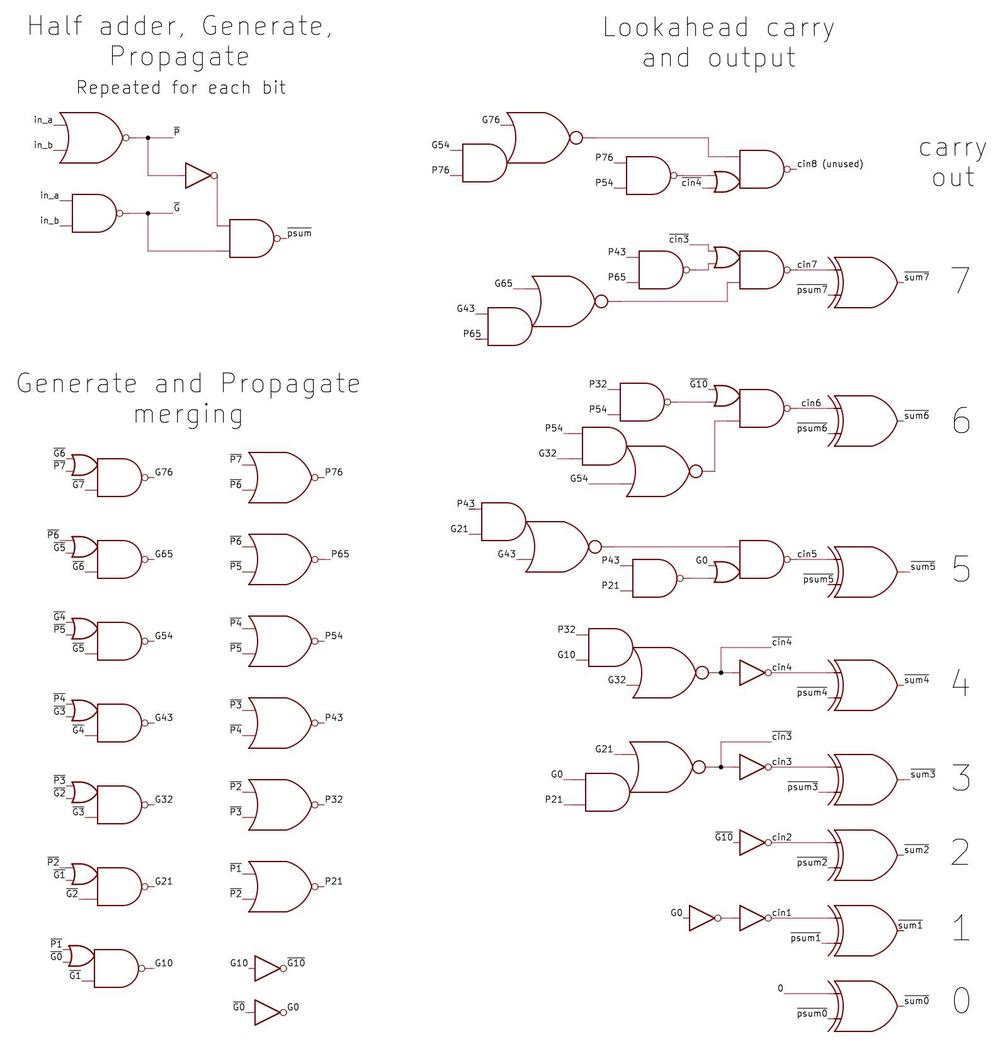

The Pentium's adder is implemented with four layers of circuitry. The first layer produces the propagate and generate signals (P and G) for each bit, along with a partial sum (the sum without any carries). The second layer merges pairs of neighboring P and G values, producing, for instance G65 and P21. The third layer generates the carry-lookahead bits by merging previous P and G values. This layer is complicated because it has different circuitry for each bit. Finally, the fourth layer applies the carry bits to the partial sum, producing the final arithmetic sum.

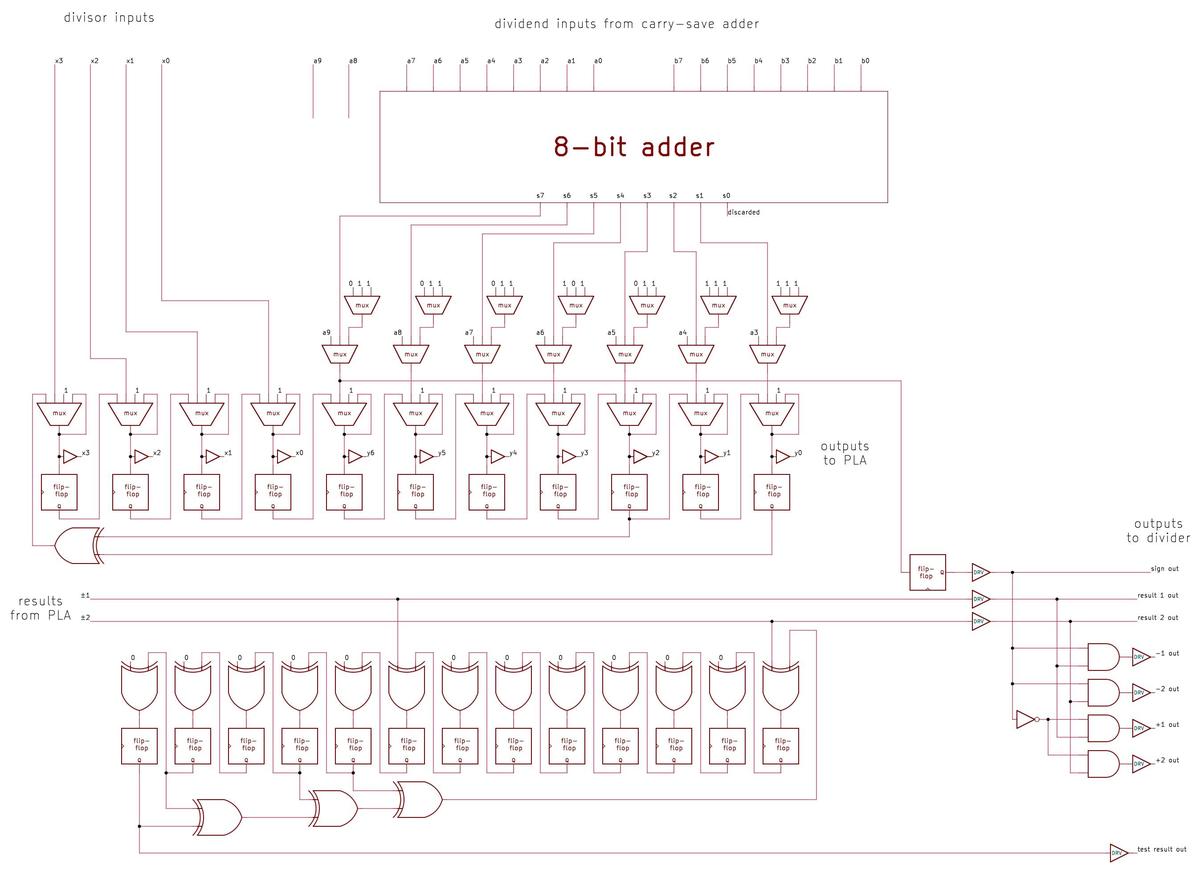

Here is the schematic of the adder, from my reverse engineering. The circuit in the upper left is repeated 8 times to produce the propagate, generate, and partial sum for each bit. This corresponds to the first layer of logic. At the left are the circuits to merge the generate and propagate signals across pairs of bits. These circuits are the second layer of logic.

The circuitry at the right is the interesting part—it computes the carries in parallel and then computes the final sum bits using XOR. This corresponds to the third and fourth layers of circuitry respectively. The circuitry gets more complicated going from bottom to top as the bit position increases.

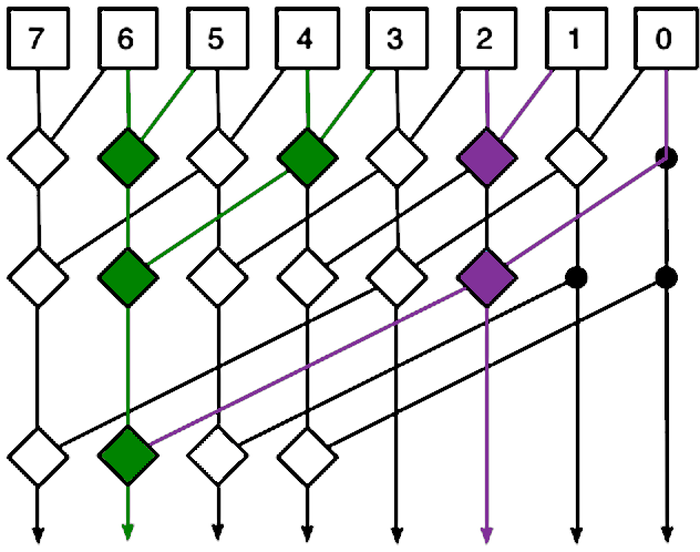

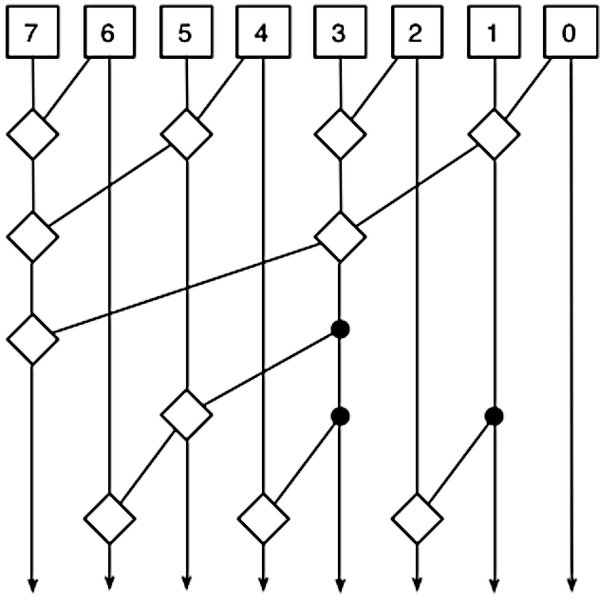

The diagram below is the standard diagram that illustrates how a Kogge-Stone adder works. It's rather abstract, but I'll try to explain it. The diagram shows how the P and G signals are merged to produce each output at the bottom. Each line corresponds to both the P and the G signal. Each square box generates the P and G signals for that bit. (Confusingly, the vertical and diagonal lines have the same meaning, indicating inputs going into a diamond and outputs coming out of a diamond.) Each diamond combines two ranges of P and G signals to generate new P and G signals for the combined range. Thus, the signals cover wider ranges as they progress downward, ending with the Gn0 signals that are the outputs.

It may be easier to understand the diagram by starting with the outputs. I've highlighted two circuits: The purple circuit computes the carry into bit 3 (out of bit 2), while the green circuit computes the carry into bit 7 (out of bit 6). Following the purple output upward, note that it forms a tree reaching bits 2, 1, and 0, so it generates the carry based on these bits, as desired. In more detail, the upper purple diamond combines the P and G signals for bits 2 and 1, generating P21 and G21. The lower purple diamond merges in P0 and G0 to create P20 and G20. Signal G20 indicates if bits 2 through 0 generate a carry; this is the desired carry value into bit 3.

Now, look at the green output and see how it forms a tree going upward, combining bits 6 through 0. Notice how it takes advantage of the purple carry output, reducing the circuitry required. It also uses P65, P43, and the corresponding G signals. Comparing with the earlier schematic shows how the diagram corresponds to the schematic, but abstracts out the details of the gates.

Comparing the diagram to the schematic, each square box corresponds to the circuit in the upper left of the schematic that generates P and G, the first layer of circuitry. The first row of diamonds corresponds to the pairwise combination circuitry on the left of the schematic, the second layer of circuitry. The remaining diamonds correspond to the circuitry on the right of the schematic, with each column corresponding to a bit, the third layer of circuitry. (The diagram ignores the final XOR step, the fourth layer of circuitry.)
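As a rough software model of the Kogge-Stone diagram, the Python sketch below uses the textbook arrangement: each row of diamonds merges every position with the one 1, then 2, then 4 bits below it. This is an assumption-laden illustration with hypothetical names, not a gate-for-gate model of the Pentium, which groups its gates differently (a pairwise merge layer followed by irregular per-bit logic).

```python
def kogge_stone_add(a: int, b: int, width: int = 8) -> tuple[int, int]:
    """Add using a textbook Kogge-Stone prefix network; returns (sum, carry_out)."""
    # Square boxes: per-bit (G, P) plus the partial sum (sum without carries).
    gp = [((a >> n) & (b >> n) & 1, ((a >> n) | (b >> n)) & 1) for n in range(width)]
    partial = [((a >> n) ^ (b >> n)) & 1 for n in range(width)]

    # Rows of diamonds: merge each position with the one `dist` bits below it.
    dist = 1
    while dist < width:
        merged = list(gp)  # positions below `dist` pass straight through
        for n in range(dist, width):
            g_hi, p_hi = gp[n]
            g_lo, p_lo = gp[n - dist]
            merged[n] = (g_hi | (p_hi & g_lo), p_hi & p_lo)
        gp = merged
        dist *= 2

    # gp[n][0] is now Gn0, the carry out of bit n, i.e. the carry into bit n+1.
    carries = [0] + [g for g, _ in gp]
    total = 0
    for n in range(width):
        total |= (partial[n] ^ carries[n]) << n  # final XOR layer
    return total, carries[width]

print(kogge_stone_add(0b01111111, 0b00000001))  # (128, 0)
```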

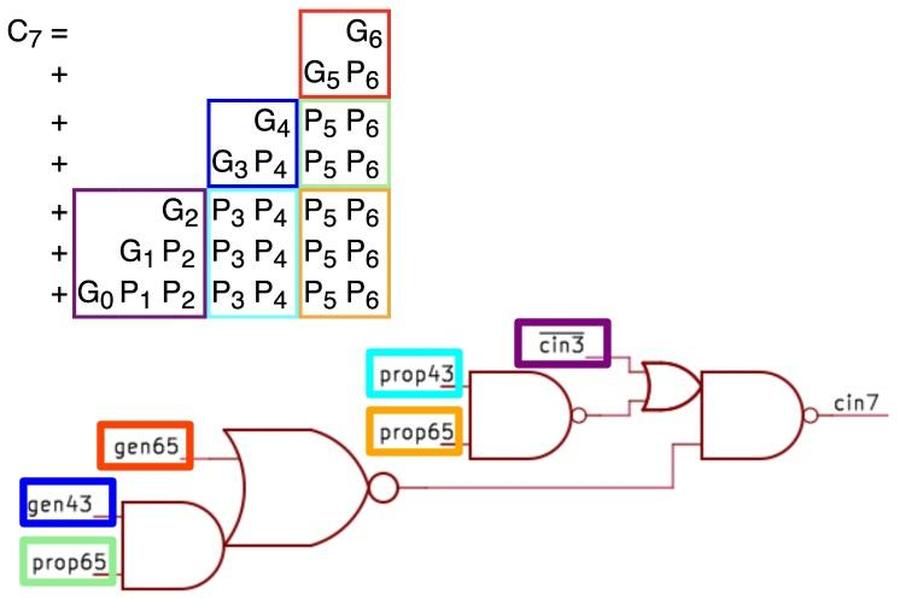

Next, I'll show how the diagram above, the logic equations, and the schematic are related. The diagram below shows the logic equation for C7 and how it is implemented with gates; this corresponds to the green diamonds above. The gates on the left below compute G63; this corresponds to the middle green diamond on the left. The next gate below computes P63 from P65 and P43; this corresponds to the same green diamond. The last gates mix in C3 (the purple line above); this corresponds to the bottom green diamond. As you can see, the diamonds abstract away the complexity of the gates. Finally, the colored boxes below show how the gate inputs map onto the logic equation. Each input corresponds to multiple terms in the equation (6 inputs replace 28 terms), showing how this approach reduces the circuitry required.
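The equivalence between the merged form and the fully expanded equation for C7 can be checked by brute force. The Python sketch below assumes the standard merge formula (G of a combined range is G of the upper part, OR P of the upper part AND G of the lower part) and uses hypothetical function names; it compares both forms for every combination of the seven G and seven P inputs.

```python
from itertools import product

def c7_flat(g, p):
    """C7 from the expanded equation: G6 + G5P6 + G4P5P6 + ... + G0P1P2P3P4P5P6."""
    c7 = 0
    for k in range(7):
        term = g[k]
        for j in range(k + 1, 7):
            term &= p[j]
        c7 |= term
    return c7

def c7_merged(g, p):
    """C7 built from merged ranges, following the green and purple paths."""
    g65, p65 = g[6] | (p[6] & g[5]), p[6] & p[5]  # pairwise merges
    g43, p43 = g[4] | (p[4] & g[3]), p[4] & p[3]
    g21, p21 = g[2] | (p[2] & g[1]), p[2] & p[1]
    c3 = g21 | (p21 & g[0])                       # purple: carry into bit 3
    g63 = g65 | (p65 & g43)                       # green: bits 6 through 3
    p63 = p65 & p43
    return g63 | (p63 & c3)                       # mix in C3

mismatches = sum(
    c7_flat(bits[:7], bits[7:]) != c7_merged(bits[:7], bits[7:])
    for bits in product((0, 1), repeat=14)
)
print(mismatches)  # 0
```

The expanded equation has 7 product terms containing 7+6+5+4+3+2+1 = 28 G and P symbols in total, which matches the count of 28 terms mentioned above.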

There are alternatives to the Kogge-Stone adder. For example, a Brent-Kung adder (below) uses a different arrangement with fewer diamonds but more layers. Thus, a Brent-Kung adder uses less circuitry but is slower. (You can follow each output upward to verify that the tree reaches the correct inputs.)
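For comparison, here is a sketch of the textbook Brent-Kung arrangement in Python. The schedule function and its name are illustrative assumptions, and the width must be a power of two. It first builds a binary tree of merges (the up-sweep) and then fills in the remaining prefixes (the down-sweep).

```python
def brent_kung_levels(width: int = 8) -> list[list[tuple[int, int]]]:
    """Merge schedule: each level lists (target, source) positions merged in parallel."""
    levels = []
    step = 1
    while step < width:   # up-sweep: binary tree of merges
        levels.append([(i, i - step) for i in range(2 * step - 1, width, 2 * step)])
        step *= 2
    step = width // 4
    while step >= 1:      # down-sweep: fill in the missing prefixes
        levels.append([(i, i - step) for i in range(3 * step - 1, width, 2 * step)])
        step //= 2
    return levels

def brent_kung_add(a: int, b: int, width: int = 8) -> tuple[int, int]:
    """Add using the schedule above; returns (sum, carry_out)."""
    gp = [((a >> n) & (b >> n) & 1, ((a >> n) | (b >> n)) & 1) for n in range(width)]
    for level in brent_kung_levels(width):
        for hi, lo in level:
            g_hi, p_hi = gp[hi]
            g_lo, p_lo = gp[lo]
            gp[hi] = (g_hi | (p_hi & g_lo), p_hi & p_lo)
    carries = [0] + [g for g, _ in gp]  # carries[n] is the carry into bit n
    total = 0
    for n in range(width):
        total |= (((a >> n) ^ (b >> n) ^ carries[n]) & 1) << n
    return total, carries[width]

levels = brent_kung_levels(8)
print(len(levels), sum(len(level) for level in levels))  # 5 11
```

For 8 bits, this schedule uses 11 merges spread over 5 levels. A textbook Kogge-Stone network that computes the same eight prefixes uses 7+6+4 = 17 merges in 3 levels, which illustrates the tradeoff of less circuitry for more delay.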

Conclusions

The photo below shows the adder circuitry. I've removed the top two layers of metal, leaving the bottom layer of metal. Underneath the metal, polysilicon wiring and doped silicon regions are barely visible; they form the transistors. At the top are eight blocks of gates to generate the partial sum, generate, and propagate signals for each bit. (This corresponds to the first layer of circuitry as described earlier.) In the middle is the carry lookahead circuitry. It is irregular since each bit has different circuitry. (This corresponds to the second and third layers of circuitry, jumbled together.) At the bottom, eight XOR gates combine the carry lookahead output with the partial sum to produce the adder's output. (This corresponds to the fourth layer of circuitry.)

The Pentium uses many adders for different purposes: in the integer unit, in the floating point unit, and for address calculation, among others. Floating-point division is known to use a carry-save adder to hold the partial remainder at each step; see my post on the Pentium FDIV division bug for details. I don't know what types of adders are used in other parts of the chip, but maybe I'll reverse-engineer some of them. Follow me on Bluesky (@righto.com) or RSS for updates. (I'm no longer on Twitter.)

Footnotes and references

-

Strangely, the original paper by Kogge and Stone had nothing to do with addition and carries. Their 1973 paper was titled, "A Parallel Algorithm for the Efficient Solution of a General Class of Recurrence Equations." It described how to solve recurrence problems on parallel computers, in particular the massively parallel ILLIAC IV. As far as I can tell, it wasn't until 1987 that their algorithm was applied to carry lookahead, in Fast Area-Efficient VLSI Adders. ↩

-

I'm a bit puzzled why the circuit uses an 8-bit carry-lookahead adder since only 7 bits are used. The adder's bottom output bit is not connected to anything, and the carry-out is also unused. Perhaps the 8-bit adder was a standard logic block at Intel and was used as-is. ↩

-

I probably won't make a separate blog post on the testing circuitry, so I'll put details in this footnote. Half of the circuitry in the adder block is used to test the lookup table. The reason is that a chip such as the Pentium is very difficult to test: if one out of 3.1 million transistors goes bad, how do you detect it? For a simple processor like the 8080, you can run through the instruction set and be fairly confident that any problem would turn up. But with a complex chip, it is almost impossible to come up with an instruction sequence that would test every bit of the microcode ROM, every bit of the cache, and so forth. Starting with the 386, Intel added circuitry to the processor solely to make testing easier; about 2.7% of the transistors in the 386 were for testing.

To test a ROM inside the processor, Intel added circuitry to scan the entire ROM and checksum its contents. Specifically, a pseudo-random number generator runs through each address, while another circuit computes a checksum of the ROM output, forming a "signature" word. At the end, if the signature word has the right value, the ROM is almost certainly correct. But if there is even a single bit error, the checksum will be wrong and the chip will be rejected. The pseudo-random numbers and the checksum are both implemented with linear feedback shift registers (LFSR), a shift register along with a few XOR gates to feed the output back to the input. For more information on testing circuitry in the 386, see Design and Test of the 80386, written by Pat Gelsinger, who became Intel's CEO years later. Even with the test circuitry, 48% of the transistor sites in the 386 were untested. The instruction-level test suite to test the remaining circuitry took almost 800,000 clock cycles to run. The overhead of the test circuitry was about 10% more transistors in the blocks that were tested.

In the Pentium, the circuitry to test the lookup table PLA is just below the 7-bit adder. An 11-bit LFSR creates the 11-bit input value to the lookup table. A 13-bit LFSR hashes the two-bit quotient result from the PLA, forming a 13-bit checksum. The checksum is fed serially to test circuitry elsewhere in the chip, where it is merged with other test data and written to a register. If the register is 0 at the end, all the tests pass. In particular, if the checksum is correct, you can be 99.99% sure that the lookup table is operating as expected. The ironic thing is that this test circuit was useless for the FDIV bug: it ensured that the lookup table held the intended values, but the intended values were wrong.
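The signature scheme can be sketched in Python. Everything specific here is an assumption for illustration: the tap positions come from published tables of maximal-length LFSRs rather than from the Pentium, the way each 2-bit output is folded into the checksum is a guess, and the ROM contents are made up.

```python
def lfsr_step(state: int, width: int, taps: tuple[int, ...]) -> int:
    """Advance a Fibonacci LFSR: XOR the tapped bits and shift the result in."""
    feedback = 0
    for t in taps:  # taps are 1-indexed bit positions
        feedback ^= (state >> (t - 1)) & 1
    return ((state << 1) | feedback) & ((1 << width) - 1)

def address_sequence(width: int = 11, taps: tuple[int, ...] = (11, 9), seed: int = 1):
    """Yield pseudo-random addresses until the sequence returns to the seed."""
    state = seed
    while True:
        yield state
        state = lfsr_step(state, width, taps)
        if state == seed:
            return

def rom_signature(rom: list[int], width: int = 13,
                  taps: tuple[int, ...] = (13, 4, 3, 1)) -> int:
    """Fold each 2-bit ROM output into a 13-bit checksum register."""
    sig = 0
    for addr in address_sequence():
        sig = lfsr_step(sig, width, taps) ^ rom[addr]
    return sig

rom = [(3 * addr + 1) % 4 for addr in range(2048)]  # made-up 2-bit contents
good = rom_signature(rom)

bad_rom = list(rom)
bad_rom[1234] ^= 1                     # inject a single-bit error
print(len(list(address_sequence())))   # 2047
print(rom_signature(bad_rom) != good)  # True
```

One limitation of this sketch: a plain LFSR never reaches the all-zeros state, so it visits 2047 of the 2048 addresses and address 0 would need separate handling.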

Why did Intel generate test addresses with a pseudo-random sequence instead of a sequential counter? It turns out that a linear feedback shift register (LFSR) is slightly more compact than a counter. This LFSR trick was also used in a touch-tone chip and the program counter of the Texas Instruments TMS 1000 microcontroller (1974). In the TMS 1000, the program counter steps through the program pseudo-randomly rather than sequentially. The program is shuffled appropriately in the ROM to counteract the sequence, so the program executes as expected and a few transistors are saved.

Block diagram of the testing circuitry.

-

The bits 1+1 will set generate, but should propagate be set too? It doesn't make a difference as far as the equations. This adder sets propagate for 1+1 but some other adders do not. The answer depends on if you use an inclusive-or or exclusive-or gate to produce the propagate signal. ↩

-

One solution is to implement the carry-lookahead circuit in blocks of four. This can be scaled up with a second level of carry-lookahead to provide the carry lookahead across each group of four blocks. A third level can provide carry lookahead for groups of four second-level blocks, and so forth. This approach requires O(log(N)) levels for N-bit addition. This approach is used by the venerable 74181 ALU, a chip used by many minicomputers in the 1970s; I reverse-engineered the 74181 here. The 74182 chip provides carry lookahead for the higher levels. ↩

-

I won't go into the mathematics of merging P and G signals; see, for example, Adder Circuits, Adders, or Carry Lookahead Adders for additional details. The important factor is that the carry merge operator is associative (actually a monoid), so the sub-ranges can be merged in any order. This flexibility is what allows different algorithms with different tradeoffs. ↩

-

The idea behind a prefix adder is that we want to see if there is a carry out of bit 0, bits 0-1, bits 0-2, bits 0-3, 0-4, and so forth. These are all the prefixes of the word. Since the prefixes are computed in parallel, it's called a parallel prefix adder. ↩

4 comments:

What do the others adders in the Pentium looks like ? Like in the integer ALU or the FPU ? I suppose the integer ALU is not really and adder but something more elaborated, but for the FPU ADD instruction, what's the circuit ?

Also, i'm surprised the ALU takes more place than the decoder+microcode. The decoder+microcode must be implemented very efficiently.

A 13-bit checksum means there is 8192 possible values (that indeed tell you if the ROM is valid with a 99.99% confidence). Intel probably sold millions of those. Even if 1% of them were defective, it's still a lot. I wonder if there was cases of CPUs with a detective ROM being undetected as the checksum would by chance appear to be valid.

The 13 bit checksum means that if there is an error (that affects the results in a predictable way) it is 99.99% likely that it changes the check sum so that you see it. I would assume that single bit errors are even more likely to change it. Now you have to combine this with the probability of having an error in this part of the chip in the first place which should be pretty low, so I would say that the test serves to further reduce the probability of selling a defective chip by a factor of 10000 or so.

@Toivo Henningson : thanks for pointing that out. My initial comment expected all bits of the ROM to be wrong (with some chance of checksum the same as when ROM is valid). For a small ROM of a few KB there is indeed lot of possible states. In reality, only a few bits of the ROM will be invalid, so chance you got the right checksum out of it is really low.
